Infinite Worlds

AI, IP Composability and Autonomous Agents.

Hello!

A year ago, Sid and I wrote this piece on how the creator economy in gaming is coming together. In the year that followed, we took minority stakes in four gaming ventures, one of which has a little over 100 million installs. But the unit economics of gaming have evolved since then, so we revisited the theme, and this article is the result.

Any sufficiently advanced technology does not look too different from magic. This piece makes a lot of tall claims. Wherever possible, I have given live instances of functional products. Where products are not live, I have quoted founders building at the frontier and attributed the claims to them.

Think of it as a primer about how the web is evolving in response to the changing economics of AI.

In this article, we use gaming as a backdrop to explain how autonomous agents will embed themselves in our activities. We took that approach because games are contained environments where experimentation has a low cost. The arguments made here can be extrapolated to the frontiers of finance, labor coordination and niche verticals we have barely thought of, especially in emerging markets.

If you are a founder exploring those themes, we’d love to talk to you. Use the form below to get in touch.

We are also looking to add one more analyst to the team at Decentralised.co.

More details in the button below.

Back to the article…

In 1950, Alan Turing wrote a paper titled 'Computing Machinery and Intelligence'. He started by asking a simple question: Can machines think? Throughout modern history, there have been attempts at creating machines that mimic human intelligence. The original Mechanical Turk was a machine that appeared to play chess on its own, while a man hidden underneath it made the moves.

While steam engines and electricity powered our machines, we had few avenues to replicate human consciousness. Turing predicted that by the year 2000, when computer memory had reached 100 MB, we would have machinery that mimicked human intelligence.

The Turing test – named after Turing himself – seeks to detect if a machine can pass for a human in conversation. I write these words on a machine with 32 GB of RAM - roughly 320 times what Turing imagined machines would have in the following decades. I used ChatGPT three times today, and some of the generated conversations seemed like they could have been with a real human.

Today's issue explores a simple question: Can autonomous agents replicate human behaviour for fun and profit on blockchains? And if they can, what would it mean for virtual economies such as the one in games? I know these terms may sound difficult to comprehend. What even is an autonomous agent?

Stay with me, and we'll go on an exploratory journey involving non-player characters (NPCs), DeFi, games, and unified economic layers for artificial intelligence (AI) interactions.

NPCs to Humans

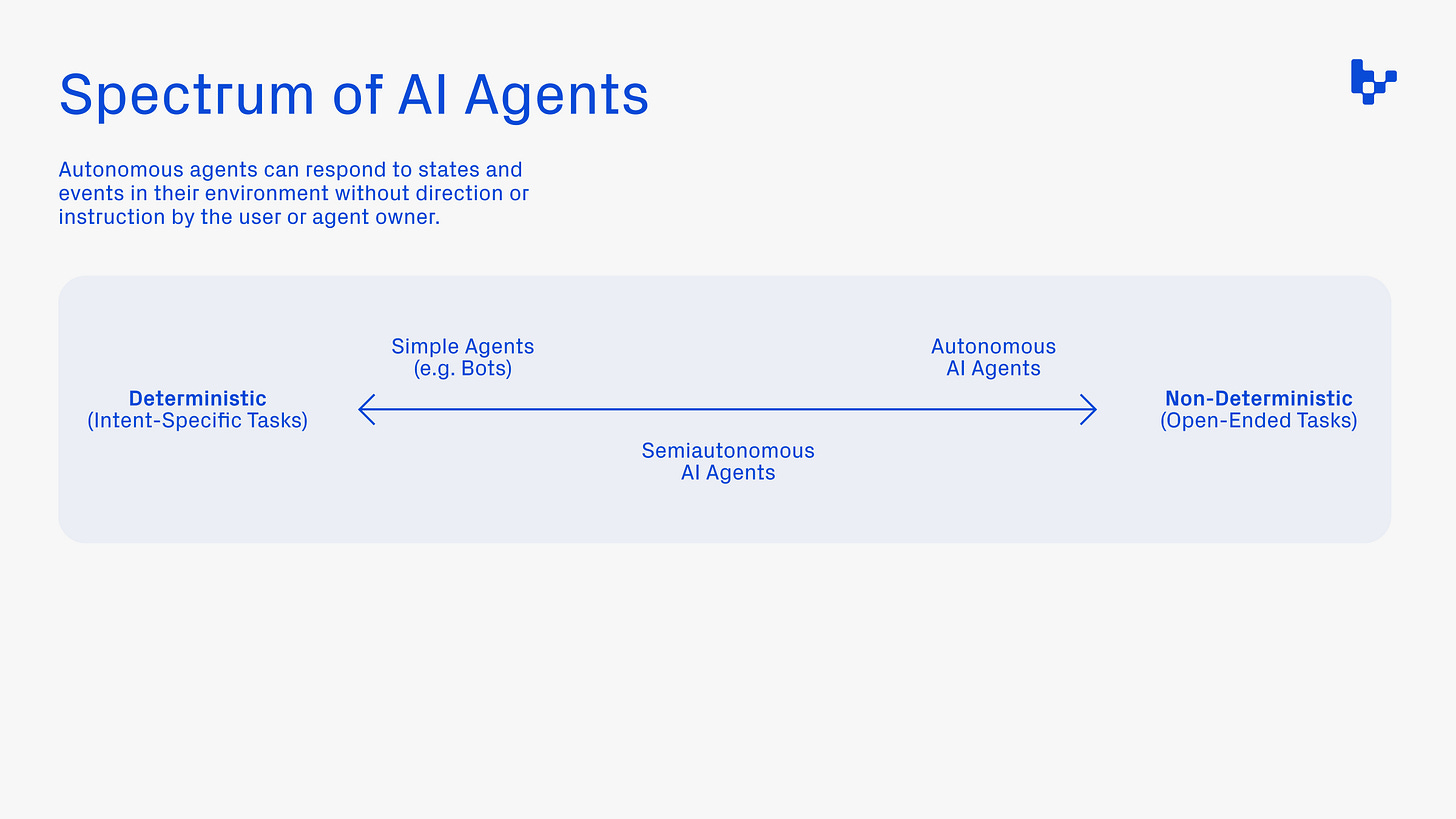

Let's start with gaining an understanding of what autonomous agents are. Bots account for over 45% of all internet traffic today. These rule-based entities complete functions like tweeting a certain hashtag or booking a flight right when it becomes available. They usually excel at one or two functions.

On the other hand, an autonomous agent is an entity that behaves independently in response to the environment it finds itself in.

The best example of an autonomous agent is that of an NPC in a game like Grand Theft Auto V (GTA 5). These characters vary widely in how they interact with a gamer.

For instance, in Red Dead Redemption, the NPCs treat you differently depending on whether you have been killing other characters in the game. Similarly, Assassin's Creed's NPCs take moralistic stances depending on the choices you make in the game. The variation of NPCs adds to each player's experience. Each time you play these open-world games, you end up with a slightly different experience.

In linear storylines, you can only experience the game once. You more or less know what is to be expected. But in open worlds, such as the ones in GTA 5 or Assassin's Creed, the variations with which NPCs behave add to the randomness of the experience. The unpredictability makes it a unique story arc each time you play the game. Studios recognise this.

However, there are limits to how far humans can hand-design NPCs. For instance, if you have a thousand NPCs in a game, you have to find mechanisms to design each one's behaviour, clothing and speech. Each additional character adds to the expense.

This difficulty is partly why franchises like Red Dead Redemption and GTA have close to a decade between new releases. Last year, Ubisoft tinkered with using AI for designing its NPC character scripts. Generative AI would create scripts that dictate how a character responds. A human scriptwriter would then work out how the character responds to certain behaviours within a game.

In such a model, AI reduces the time taken to generate new characters. The studio maintains that humans still design the core story arc of their games. But while AI-generated story arcs and character scripts may still seem far-fetched, world generation using AI is quite real.

The most prominent example is Microsoft Flight Simulator. The game allows players to emulate flying hundreds of aeroplane models around the world. To recreate the world from a pilot's view, the team behind the game mapped out the world using satellite imagery. They then used AI models to make 3D models of what the world looks like. The game is sophisticated enough to replicate storms within its world in real-time using weather data. All of it runs on Azure AI today.

This interplay between gaming and AI is not new. As early as 1997, IBM's Deep Blue was playing chess better than the best humans. Twenty-five years later, the quest has become making bots that increasingly play like humans. Where Deep Blue played with an obsession to beat humans, machine learning models like Maia obsess about mimicking human behaviour within the game.

The model was trained on some ~12 million human games at different levels. It comes in nine skill levels, so a lower-ranked player can find one that plays like them. If you'd like to play with it, give it a go here.
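To make the matching idea concrete, here is a minimal sketch of picking the level closest to a player's rating. It assumes the nine levels are rating bands spaced 100 points apart from 1100 to 1900; the spacing and the function name are assumptions made for illustration, not something stated above.

```python
# Assumed rating bands for the nine levels (1100, 1200, ..., 1900).
MAIA_LEVELS = list(range(1100, 2000, 100))


def pick_level(player_rating: int) -> int:
    """Return the level whose rating is closest to the player's rating."""
    return min(MAIA_LEVELS, key=lambda level: abs(level - player_rating))
```

A player rated 1234 would be paired with the 1200 level, while anyone rated below the lowest band is simply paired with the lowest one.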

Why does this matter? If the objective is entertainment, then you need the model to mimic human behaviour. If the objective is competition, you likely need a model that always wins. A few startups have been attempting to replicate the behaviour of human gamers using video footage. Their claim is that footage of gameplay from CS:GO or a similar game is sufficient to replicate a gamer’s natural playing style. In theory, this would mean I should be able to replicate a friend’s style of playing FIFA or GTA 5.

Since gamers tend to have Twitch streams with direct clips of their gameplay, it may not be far-fetched to imagine a world where gamers can monetise themselves for just their ability to play or their playing style. In such a (hypothetical) model, a gamer ranked incredibly high in Fortnite could teach a model how to play similarly to them and charge micro-transactions for other gamers to play against the AI-powered version in a game.

For studios, the upside would be having a richer gaming experience than simply using NPCs in their game. My understanding is that indie developers stand to benefit the most from the convergence of these factors. For what it’s worth, this is a tall claim and we have not seen live instances of functional agents that are as good as human gamers yet.

But before we go there, it is worth exploring how AI has played a role in on-chain transactions today.

Intelligent Chains

In September 2023, half of all transactions on the Gnosis chain were carried out by an AI agent. The complexity of tasks these agents can perform has evolved drastically. A good benchmark to understand this is Mason Nystrom's breakdown of the spectrum of AI agents, as shown below. Early-stage agents could perform simple functions, such as notifying users of a transaction to a particular wallet or querying a user's balance.

These agents examined on-chain data and provided an output. Recently, with the arrival of Telegram bots, retail users have gained access to more sophisticated bots. These bots can track on-chain activity and notify users of new pools launched on Uniswap. They can also detect if a tax exists on token transfers and assess the probability of a token being a scam.

Users of Telegram bots can allocate small sums to these bots in hopes of being early to a token. Projects like Onthis.xyz enable any user to run intent-centric transactions. You feed it a bundle of instructions (for example, swap asset X for asset Y on chain ABC) and it generates a wallet for you. Whenever you send assets to the wallet, it performs the required task.
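The deposit-triggers-action pattern can be sketched in a few lines. This is a toy model under stated assumptions, not Onthis.xyz's actual design: the class names, the fixed swap rate and the in-memory balances are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Intent:
    """A standing instruction: swap `sell` for `buy` on `chain`."""
    sell: str
    buy: str
    chain: str


@dataclass
class IntentWallet:
    """Hypothetical wallet that runs its intent on every matching deposit."""
    intent: Intent
    rate: float  # assumed fixed price: units of `buy` received per unit of `sell`
    balances: dict = field(default_factory=dict)

    def deposit(self, asset: str, amount: float) -> None:
        if asset != self.intent.sell:
            # Assets the intent does not cover are simply held.
            self.balances[asset] = self.balances.get(asset, 0.0) + amount
            return
        # The deposit itself triggers the swap; no further user input needed.
        received = amount * self.rate
        buy = self.intent.buy
        self.balances[buy] = self.balances.get(buy, 0.0) + received


wallet = IntentWallet(Intent(sell="X", buy="Y", chain="ABC"), rate=2.0)
wallet.deposit("X", 10)  # the wallet now holds 20 units of Y
```

The point of the design is that the user expresses the outcome once, up front, and every later transfer to the wallet is treated as a request to carry it out.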

These bots examine on-chain data and perform specific tasks. They are restricted to the functions they serve and require human input. According to Mason, the gradual evolution of agents would be towards eventually being autonomous. These could be agents that predict how the yield on a particular DeFi pool would evolve over the next six months or automatically track the best pools to park capital.

Their abilities are not restricted to querying the current market conditions for yield. Instead, they can predict market conditions and act accordingly.

Two products I saw building in this direction are Mozaic and Noya. Both claim to assist with cross-chain yield. They optimize to reduce transaction fees, bridge across networks, and find liquidity to generate yield on idle assets, all on their own. That is to say, an autonomous agent will be able to move your idle assets around and find the best spot to park them for the highest interest rate.

I could not vet how much more efficient Mozaic or Noya is when compared to traditional fund managers. However, I could tinker with a tool called Spectral Labs. This is a no-code framework that allows anyone to create autonomous agents using NLP. So you can use text on Syntax, their chat-based coding tool, and expect to receive audited code that is ready for deployment.

What we are seeing on-chain is a blend of rapidly evolving intents (use of NLP and similar user gestures to initiate transactions) and tooling that enables users to conduct transactions without being present. These tools can allow users to quickly invest in new tokens, buy into an index of meme coins, or rotate out of certain assets when specific on-chain parameters are met. Remember when I mentioned that half of all Gnosis transactions happen through an agent? A key player powering that transition is Olas Networks.

Olas provides a bundle of tools that allows users to create and manage their own autonomous agents. One of the applications I saw that hints towards how this ecosystem would evolve was ElCollectooorr. This tool helps create vaults into which a group of friends can deposit ETH. Whenever an artwork drops on ArtBlocks, the agent collects it on behalf of the vault's users. When the vault closes, users can decide what to do with the assets in the vault. It can be liquidated for ETH, or users can receive the assets themselves.
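Here is a rough sketch of the vault accounting for the case where the assets are liquidated for ETH. It assumes proceeds are split pro rata to deposits, which is my assumption rather than a documented rule of ElCollectooorr; the class and method names are hypothetical.

```python
class Vault:
    """Hypothetical shared vault: friends deposit ETH, an agent spends it."""

    def __init__(self) -> None:
        self.deposits: dict[str, float] = {}

    def deposit(self, user: str, eth: float) -> None:
        self.deposits[user] = self.deposits.get(user, 0.0) + eth

    def payout(self, proceeds_eth: float) -> dict[str, float]:
        """Split liquidation proceeds pro rata to each user's deposit."""
        total = sum(self.deposits.values())
        if total == 0:
            return {}
        return {
            user: proceeds_eth * amount / total
            for user, amount in self.deposits.items()
        }
```

If one friend deposits 1 ETH and another deposits 3 ETH, and the collected artwork is later sold for 8 ETH, the split under this assumption would be 2 ETH and 6 ETH.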

This is different from a group of users having a multi-signature wallet. A quick observation of how present-day DAOs work explains why human intelligence, on its own, does a poor job of coordinating on-chain resources in response to market events. When a group has a multi-signature wallet, it needs to make collective decisions through votes on which pieces of art are collected. An agent, in comparison, could make quicker decisions and constantly update itself in response to changing market conditions.

Humans take time to reach a consensus and are influenced by the socioeconomic dynamics of participants involved in the decision-making. Algorithms don't care.

ElCollectooorr claims that their agent is better equipped to pick winning collections. If you were creating a simple agent, you could simply mint ANY artwork released on ArtBlocks; but an intelligent agent could ideally determine which collections are worth minting and which are best avoided.

That last bit – the intelligence – does not reside on-chain today. Instead, ventures have been using cryptographic primitives to incentivise and validate autonomous agents. 'AI x Crypto Primer' by Mohamed Baioumy and Alex Cheema breaks down this phenomenon quite well.

The following is a description of the Rockefeller Bot from their primer.

'Rockefeller Bot is a trading bot that operates on-chain. It uses AI to decide which trades to make, but since the AI model isn't run on the smart-contract itself, we rely on the service provider to run the model for us, then tell the smart-contract what the AI has decided, and prove to the smart-contract that they are not lying. If the smart-contract didn't check that the service provider wasn't lying, the service provider could make harmful trades on our behalf. Rockefeller Bot allows us to prove that the service provider is not lying to the smart-contract using ZK proofs.

Here, ZK has been used to change the AI Stack. The AI Stack needs to adopt ZK techniques, otherwise, we could not use ZK to prove what the model decided for the smart-contract. The resulting AI model, which has verifiable outputs because of ZK techniques, can now be queried from the blockchain on-chain, meaning that this AI model is used inside the Crypto stack. In this case, we have used AI models within the smart-contract to decide trades and prices in a fair manner. This was not possible without AI.'

We'll be writing extensively about zero-knowledge proofs in a future article, but think of it this way: each time an LLM produces an output (say, a suggestion that you buy or sell something), there are mechanisms to verify which service provider it came from.

These mechanisms let you validate that a given source recommended a certain action (e.g. you could validate that RenTech suggested you buy 10,000 WIF tokens). The validation occurs without the source's internals being shared (i.e. RenTech would not share the proprietary models it used to come up with its decision).
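The control flow of that check (act only if the recommendation verifiably came from the claimed source) can be sketched as follows. To be clear, this is not a zero-knowledge proof: it swaps in a keyed hash (HMAC) with a shared secret purely as a stand-in, and the function names are hypothetical. A real system would verify a proof about the model's computation rather than a shared-key tag.

```python
import hashlib
import hmac


def sign(secret: bytes, recommendation: str) -> str:
    """Provider side: tag a recommendation with a keyed hash (stand-in for a proof)."""
    return hmac.new(secret, recommendation.encode(), hashlib.sha256).hexdigest()


def is_authentic(secret: bytes, recommendation: str, tag: str) -> bool:
    """Consumer side: accept the recommendation only if the tag matches."""
    return hmac.compare_digest(sign(secret, recommendation), tag)
```

A tampered recommendation (a different token, say) fails the check because its tag no longer matches, which is the property the on-chain verifier needs before it acts.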

As I said, in such a model, the data and the model itself remain off-chain, but cryptographic primitives are used to do the following:

Validate a model's output so a third party doesn't provide maligned outputs (e.g. a competing hedge fund could suggest you buy Bonk instead, leading you to losses, and claim that RenTech suggested it)

Incentivise third-party specialists to run their models on said data (Numerai is an early instance of such a model working)

Numerai, for example, provides standardised data sets with which thousands of data scientists compete to give outputs in a data science tournament. The scientists stake Numeraire (NMR) tokens to show skin in the game. That is, the predictions of someone with more NMR tokens to their name are weighted more heavily. If their predictions are consistently right, they receive more NMR tokens.

If they are wrong, they are slashed; that is, they lose their own NMR tokens. According to their dashboard, Numerai currently has about 1,218 data scientists competing in its tournament.
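The staking mechanics described above can be sketched roughly like this. It is a simplification under assumptions of my own: predictions are combined as a stake-weighted average, and stakes grow or shrink by a flat, hypothetical `rate`. The tournament's real scoring and payout rules are more involved.

```python
def stake_weighted_prediction(entries: list[tuple[float, float]]) -> float:
    """entries: (stake, prediction) pairs. Returns the stake-weighted mean."""
    total_stake = sum(stake for stake, _ in entries)
    if total_stake == 0:
        raise ValueError("no stake submitted")
    return sum(stake * pred for stake, pred in entries) / total_stake


def settle(stake: float, was_right: bool, rate: float = 0.1) -> float:
    """Return the new stake: grown by `rate` if right, slashed by `rate` if wrong."""
    return stake * (1 + rate) if was_right else stake * (1 - rate)
```

With stakes of 3 and 1 behind predictions of 1.0 and 0.0, the combined signal is 0.75, so the larger staker pulls the result towards their view and also has more to lose if they are wrong.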

When taken at large scales, you have open marketplaces where multiple people can predict the pricing of a commodity (like NFTs). One place I saw this being live and functional was Upshot. When building financial products for commodities like NFTs, you need accurate price feeds. Sometimes, these price feeds are required to be future-looking. That is, you must predict the price an asset may trade at a few weeks out to offer a loan against it accurately.

Upshot uses ML models to perform this. But there is no way to vet if the data really came from their models. An employee at Upshot could (hypothetically) switch pricing for an NFT feed and benefit from it. ZKPs offer an alternative that protects privacy, but they previously cost a lot to conduct at scale. According to Modulus, a single appraisal on Ethereum's mainnet can cost nearly $1 million. That presumes the model runs directly on Ethereum.

You can read more about how that works in their paper titled The Cost of Intelligence.

Modulus collaborated with Upshot to reduce the cost of doing so drastically. Anyone using Upshot's feeds can now verifiably claim the price feed came from Upshot. As of November last year, they were doing nearly 10k AI-powered, zk-verified appraisals every hour on Ethereum. You can read more about how they do it here.

This linkage between user-owned data and third-party models is becoming common across use cases. Earlier this year, Zama, a provider of Fully Homomorphic Encryption (FHE, which lets a third party run a computation on your data without ever seeing the data in the clear), raised close to $73 million. Its focus varies from on-chain credit scoring to predicting medical ailments from your health data. You can see a live implementation of its ability to run sentiment analysis on a wall of text here.

I am taking you through these examples because we are seeing a confluence of factors.

Firstly, the evolution of FHE will allow users to pass data on to models with far higher privacy than previously possible, as shown by Zama.

Secondly, the cost of verifying the output of these models (using tools such as Modulus) has decreased exponentially. You can run these verifications tens of thousands of times without costs adding up, as shown by Upshot.

Lastly, on-chain bots have sufficiently evolved to be able to make transactions on behalf of users without said users actively monitoring data, as shown by Rockefeller Bot and ElCollectooorr.

There is a final element to all of this: the cost of conducting transactions on-chain, which has also drastically decreased over the years. Networks such as Solana and EVM-based networks such as Base (no pun intended) allow users to conduct hundreds of transactions for a single dollar.

In an economy in which the cost of conducting transactions is declining rapidly and the ability to parse data and transact (on behalf of users) is improving exponentially, we will see bots become an increasingly common part of day-to-day transactions.

According to CNBC, close to 80% of trades on the US stock market are conducted by bots. Bots have become commonplace even in purchasing flight or concert tickets. It appears that the arrival of self-transacting bots in a marketplace marks its evolution, as we have seen with liquid assets on centralised exchanges.

Were it not for the hundreds of bots trying to arbitrage across pairs (for BTC, USDT or ETH) across exchanges, we all might have been worse off in terms of pricing. We are seeing this now with autonomous bots that can conduct transactions on-chain.

One market in which AI's influence in the context of user-owned agents merges with its influence in the context of self-transacting wallets is gaming. Let me explain how.

Intelligence for Virtual Economies

I thought much about what makes an agent different from an in-game character. In the context of Web3 games, an agent can have shared state memories. So, if an agent — a character owned by a gamer — notices that a highly skilled player has purchased a certain avatar they are competing against, the agent could evolve its gameplay accordingly.

For this to happen, the agent must have access to the marketplace where avatars are being transacted and be able to evolve its skills in response to the gamer being competed against. For such an economy to be functional, the whole stack must be integrated.

That is, IP, games, marketplaces and the agents themselves must have a common linkage. It seems to me that this is what Parallel is working towards. They are not trying to replace large AAA studios (like Ubisoft) today. They are creating unified ecosystems where agents can be used for transacting in virtual economies. I'll explain what they do shortly. But let's zoom out for a bit and see what's going on here.

Any time a new technology emerges, it is usually a confluence of factors that make it mainstream. In the early 2000s, Flash-based games were a smash hit on the web, but console gaming was a better experience because of how slow the internet was. It was only when mobile devices took off that micro-games (such as Angry Birds) became popular.

That required mobile devices to become more affordable, costs for mobile internet to reduce drastically, and touchscreens to take off on phones. A similar confluence of factors may be happening with Web3 gaming today.

In 2021, a gamer coming to Web3 gaming had to deal with the high fees of moving assets on Ethereum. The person would also have to put up capital to buy NFTs before they could play a game like Axie Infinity. The experience was transactional from the get-go. By nature, the closest market for the product to expand into was play-to-earn.

Only users who knew they could make a return on their investment would invest money into a new, primitive game. That ecosystem is vastly different today because a critical mass of users have aggregated around P2E, and community has become a larger hook for gamers than merely making a quick buck.

When games merge with AI primitives, we may be witnessing a confluence of new technologies all over again. While large studios (like Ubisoft) have much to lose by embracing generative worlds, newer ones like Parallel may embrace them due to their ability to offer better experiences to their users. The novelty that comes from having AI-native NPCs or agents that can be trained for profit can drive Web3 gaming into the mainstream.

In an ideal world, Web3 native gaming and AI will switch the category from one that is primarily transactional to a more leisurely ecosystem. It seems like Parallel is building on this opportunity subset.

Parallel is currently best known for Parallel TCG, their card-based game. But backing the game is an ecosystem run by the Echelon foundation. It is a non-profit designed to provide infrastructure, IP and know-how to a collective ecosystem. PRIME is the token they use to govern the ecosystem. There is a separate studio that goes by the name of Parallel Studios that develops games. Parallel TCG went live in July 2023.

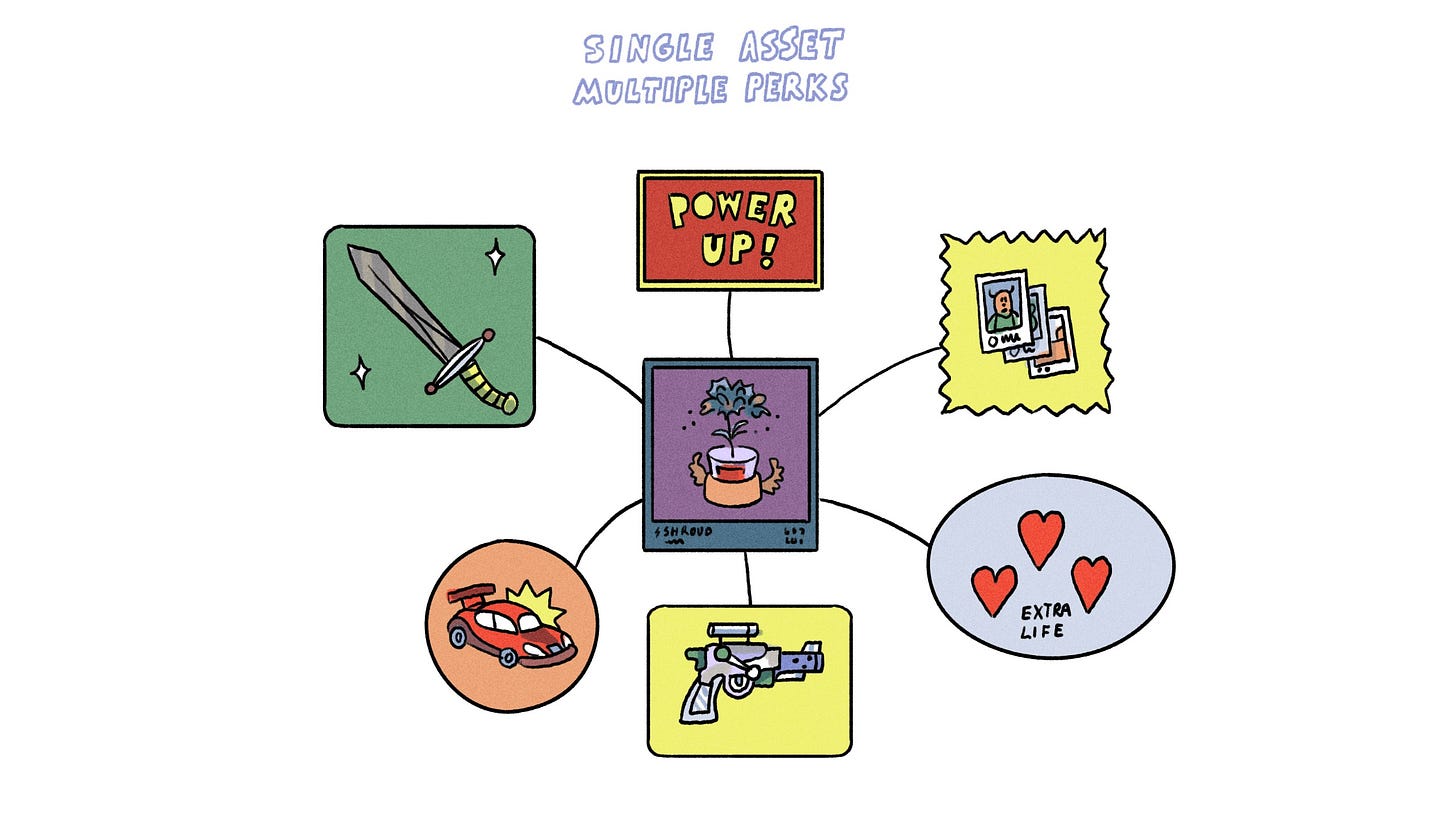

They are one of the earliest instances of what composable IP in the context of gaming looks like. For instance, Parallel Avatars is a collection of 11,000 NFTs. The initial benefit of having these NFTs could simply be receiving early access to a game. But in the future, the plan is to use them in game lore.

One place this is possible is Colony, a second game released by Parallel. It is a strategic survival simulation game where AI-based agents compete to survive.

The way it works is that a game could simply integrate Parallel's Avatars (the NFTs) into any newly launched game to acquire the 2,300+ users that own Parallel Avatars today. So, what you effectively have is IP composability. The game's developers could link certain skills to the characters based on the metadata from the NFT itself.
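As a sketch of how a developer might link skills to an NFT's metadata, consider the snippet below. The metadata follows the common `attributes` list shape, but the trait names, bonus table and function name are all hypothetical and not taken from Parallel's actual collection.

```python
# Hypothetical NFT metadata in the common "attributes" list shape.
avatar_metadata = {
    "name": "Avatar #1",
    "attributes": [
        {"trait_type": "Faction", "value": "Alpha"},
        {"trait_type": "Rarity", "value": "Rare"},
    ],
}

# Hypothetical, game-specific mapping from traits to in-game bonuses.
TRAIT_BONUSES = {
    ("Faction", "Alpha"): {"defence": 2},
    ("Rarity", "Rare"): {"attack": 1, "defence": 1},
}


def skills_from_metadata(metadata: dict) -> dict[str, int]:
    """Sum the bonuses of every recognised trait; unknown traits are ignored."""
    skills: dict[str, int] = {}
    for attr in metadata.get("attributes", []):
        bonus = TRAIT_BONUSES.get((attr["trait_type"], attr["value"]), {})
        for skill, points in bonus.items():
            skills[skill] = skills.get(skill, 0) + points
    return skills
```

Because the bonus table lives in the game and not in the NFT, each new game can interpret the same avatar differently while still recognising the same holders.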

Last week, they announced Wayfinder, a bundle of tools for developers to issue and manage agents. The whitepaper is a rabbit hole in itself, but it is quite telling of how Web3 games are evolving. They imagine a future where AI agents (your in-game characters) take on-chain actions (like the Rockefeller Bot does) without user input while users are away from their screens.

Parallel's approach seems to be bundling several services that are interoperable. They started with Avatars, which gave an initial user base, and then they released TCG and Colony — two separate games that can feed off those NFTs. Now, they have released Wayfinder — a tool for building agents within game economies.

Wayfinder provides the tooling for game studios to create in-game agents that perform on-chain activities. For example, if an agent finds an arbitrage opportunity in a game, it could use Wayfinder to make an on-chain swap to take advantage of the opportunity. (You can see an early demo of the product here.)

Nim Network has similarly been aggregating a number of prominent firms building at the intersection of autonomous agents and gaming. They went live with a Dymension-based RollApp earlier this week.

This transition towards blending AI and games is not a Web3 native phenomenon. Even outside the world of blockchains, games and AI have been steadily blending together. The market map below is an exhaustive list of today's tools that blend gaming and AI.

You can realistically use AI to generate a world using Blockade, add music to it through Suno, use Luma Labs to model 3D assets, and fill the world with NPCs powered by Inworld.

We are currently seeing a drastic reduction in the cost and effort required to create virtual worlds. Social media enabled everyone to be their own standalone media outlet. AI will make it possible for everyone to create their own standalone games.

Does this mean our traditional perception of games is about to become redundant? Probably not. Social media did not upend the traditional movie industry. What will happen instead is that human attention will now be split across a multitude of games that provide unique, on-demand experiences. These updates could be linked to a user's real-life variables, such as geographic location or on-chain footprint.

Games that constantly update themselves would, in turn, attract more users for longer periods, as there is always something new to look forward to in them.

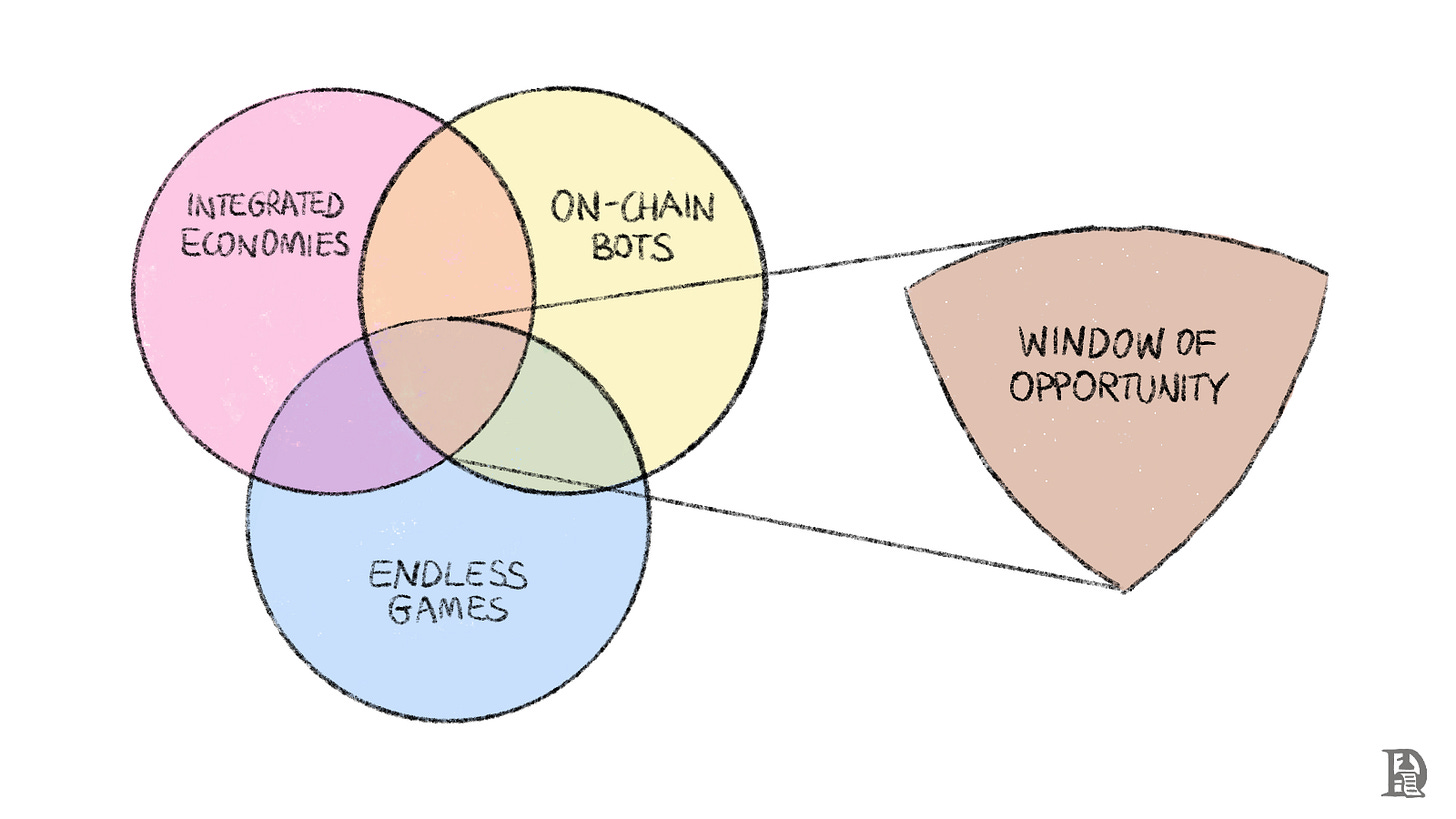

a16z calls this category never-ending games. What we really have is a confluence of three factors.

Firstly, there is the ability to bring off-chain intelligence to on-chain bots using tools like ZKML.

Secondly, there is the explosion of virtual worlds thanks to the arrival of generative AI.

Thirdly, there is the emergence of integrated ecosystems such as those of the Olas network and Parallel, which facilitate the tooling required to create agents that interact highly efficiently.

Part of what would empower this transition towards the use of agents in gaming is token bound accounts.

I have written about token bound accounts previously. Here's a quick refresher of the article for those just hearing about it now.

In the context of identity, these assets are currently used in two ways. NFTs are used to attest ownership by simply checking wallet balances. Using NFTs to prove identity would mean a person was either wealthy enough to acquire it (with capital) or skilled enough to mint it early on when the NFT was released. A soulbound token (SBT), on the other hand, cannot usually be acquired by capital alone. By nature, SBTs are nontransferable, so users have to put in effort and time to acquire them.

But what if you had a mechanism to combine SBTs, simple assets (like stablecoins) and NFTs into a single standard and gave it the ability to transact? That is ERC-6551. In this model, you convert an NFT into a wallet. A user's action can add layers of assets to the NFT. These layers can be metadata that is hosted on centralised servers or assets that are held on-chain. A user with the TBA can move single assets (like stablecoins) or transfer the TBA, along with all of the assets held by it.

ERC-6551s are basically NFTs with wallets. The image below shows how this works in practice. You could start with a simple NFT like Parallel's Avatar NFTs. As a game progresses, its abilities and resources would evolve on-chain. So if you were hunting for animals in one game and received +100 food, it could leave an imprint on-chain. When you log in to a different game with the same wallet, this information could be processed to enable a different experience.
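The "NFT with a wallet" idea can be modelled in plain Python. This is a toy model of the behaviour described above, not the ERC-6551 interface itself; the class, its methods and the in-memory dictionaries are hypothetical stand-ins for on-chain state.

```python
class TokenBoundRegistry:
    """Toy model: each NFT id maps to an owner and to its own asset bag."""

    def __init__(self) -> None:
        self.owner_of: dict[int, str] = {}
        self.account_assets: dict[int, dict[str, int]] = {}

    def mint(self, token_id: int, owner: str) -> None:
        self.owner_of[token_id] = owner
        self.account_assets[token_id] = {}

    def credit(self, token_id: int, asset: str, amount: int) -> None:
        """Record assets earned by the NFT, e.g. +100 food from a hunt."""
        bag = self.account_assets[token_id]
        bag[asset] = bag.get(asset, 0) + amount

    def withdraw(self, token_id: int, caller: str, asset: str, amount: int) -> None:
        """Move a single asset out; only the NFT's current owner may do so."""
        if self.owner_of[token_id] != caller:
            raise PermissionError("caller does not own the NFT")
        bag = self.account_assets[token_id]
        if bag.get(asset, 0) < amount:
            raise ValueError("insufficient balance")
        bag[asset] -= amount

    def transfer(self, token_id: int, caller: str, new_owner: str) -> None:
        """Transfer the NFT; everything in its account moves with it."""
        if self.owner_of[token_id] != caller:
            raise PermissionError("caller does not own the NFT")
        self.owner_of[token_id] = new_owner
```

The key property is that control of the asset bag follows ownership of the NFT: after a transfer, the new owner can withdraw the food and the previous owner cannot.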

What ERC-6551 allowed (before AI) was the interoperability of a single wallet (and its associated data) across games. So, if you build an AI native ecosystem, you can use the data (from an NFT) to recreate unique worlds and experiences. Remember how I explained the challenges of NPCs in the initial bits of this article? You can (hypothetically) recreate how a game's world looks or how characters interact with a gamer, depending on the characteristics of an NFT.

This may seem far-fetched, but the primitives needed to make this happen exist today.

For instance, Virtuals.so allows you to create, mint and monetise AI personas in digital worlds. You can 'teach' a character how to carry out certain skills like accounting, offering therapy, or being a co-pilot within a game. Similarly, Story Protocol allows you to build upon IP that can be programmed across mediums.

Story Protocol is working towards a world in which IP becomes a platform. You could use characters from Marvel or Pokémon within a game, so long as the original creators receive a portion of the revenue. In the past, IP-as-a-platform approaches struggled to take off because you did not have mechanisms to trustlessly validate the use of IP across the web. I could download Marvel characters and run them through an LLM model without the studio suing me. The studio itself had no incentive to let me do so.

However, the studio does have such an incentive if a distributed ledger (like a blockchain) makes the process of licensing the IP and splitting the associated revenue with the studio as ubiquitous as downloading an image.
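The revenue-splitting step is simple enough to sketch. The function below is an illustration only: the name, the use of basis points and the integer-cents accounting are my assumptions, not a description of how Story Protocol implements licensing.

```python
def split_revenue(revenue_cents: int, royalty_bps: int) -> tuple[int, int]:
    """Return (ip_owner_share, developer_share) in cents.

    royalty_bps is the IP owner's cut in basis points (1 bps = 0.01%).
    """
    if not 0 <= royalty_bps <= 10_000:
        raise ValueError("royalty_bps must be between 0 and 10000")
    owner_share = revenue_cents * royalty_bps // 10_000
    # The developer receives the remainder, so no cent is lost to rounding.
    return owner_share, revenue_cents - owner_share
```

At a 15% royalty (1,500 basis points), $100 of in-game revenue would send $15 to the studio that owns the IP and $85 to the game's developer.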

NFTs were a preliminary approach to this issue. You could realistically 'validate' that you own a BAYC character you printed on your t-shirt. But even there, the challenge was that the original creators (Yuga Labs) had no mechanism to get a portion of the revenue these characters generated.

Side note: As far as I understand, the best use of BAYCs was in this Eminem video.

Blending such agents with virtual economies can further facilitate the flow of value. For instance, a group of users can come together to create agents that are extremely fun to play against. They could then offer these agents 'on lease' to a third-party game developer to integrate with a game and earn some of the revenue generated.

In such a model, the 'NFT' (or ERC-6551) does not host the skills it needs to perform these functions on-chain. They will be hosted (and developed) off-chain. However, the validation of who owns these skills will occur through cross-referencing whether the wallet has the NFT required to access the skill.
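That cross-referencing step might look something like the sketch below. Both lookup tables are hypothetical: in practice the ownership data would be read from the chain, and the skill catalogue would live with whoever hosts the off-chain model.

```python
# Hypothetical snapshot of on-chain ownership: token id -> owner wallet.
nft_owners = {101: "0xalice", 102: "0xbob"}

# Hypothetical off-chain catalogue: which token unlocks which skill.
skill_required_token = {"trading-agent": 101}


def can_use_skill(wallet: str, skill: str) -> bool:
    """Allow access only if the wallet owns the NFT the skill requires."""
    token_id = skill_required_token.get(skill)
    return token_id is not None and nft_owners.get(token_id) == wallet
```

The skill itself never touches the chain; only the ownership check does, which is why the NFT can change hands and take the access with it.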

Let me explain in simpler terms. Remember ElCollectooorr, the AI-based Art Blocks collector I mentioned earlier? Presume you have an agent trained using AI models to be highly proficient in trading on behalf of a user. A developer could grant a particular NFT holder access to this agent at a certain game level.

In such an instance, the model and data used to train the agent are not directly connected to the NFT. They remain off-chain, but the user can create profit via the agent's interactions with multiple in-game markets. Perhaps the developer could even charge a portion of the profits generated from these agent interactions for facilitating this service.

There are two products in the wild where you can see the confluence of such technologies. One of them is Alethea’s live agents. These agents are capable of producing emotive faces from text input. The other is Polywrap’s AutoTx agents. Powered by Biconomy’s account abstraction SDK, Polywrap’s agents can carry out basic functions such as sending tokens or conducting a swap with just conversational inputs.

In the future, we may have a marketplace where such agents are leased out to users.

Such a system gets even more interesting if you can scale it to multiple players, as this enables the contribution of lore, story, 3D assets, or skills to a developer's models for usage within a game. In the past, due to the skills needed and the standardisation of inputs required for a game, it was impossible to have gamers give inputs. But generative AI collapses that skill gap.

So, hypothetically, you can have gamers provide elements of story, lore, 3D assets, or specific skills for usage in an open world and have economic value travel both ways. Most importantly, a gamer could contribute in order to gain added perks within a game. The “motive” to contribute to such models switches from making an earning to gaining status within game economies.

That is, developers could use it within a game, and gamers could get either platform rewards for it or a portion of the revenue generated through it.

In such a model, the benefit for the user will be the ability to have an ecosystem of dynamic games — ones that can evolve rapidly as they are not being developed by individual developers but rather an open ecosystem of contributors. For developers, the upside is tapping into an open ecosystem that blends IP, games, and economic value transfer.

I think such an approach has broader implications for the industry too. Currently, AI is restricted to a handful of games released by a few developers. Their users are primarily driven by play-to-earn economics. That is, games are required to be inflation-heavy (or speculative) to retain users. The reason why Web3 games are throttled is the limitation on the number of games that can be released.

Play-to-earn will be replaced by contribute-to-earn in a generative AI world.

There could be users who are simply interested in arbing between economies — ones who are more aligned to developing and monetising niche skills. Or, there could be artists that profit from generating 3D models for games. In essence, we will witness multi-world marketplaces that incentivise a broader spectrum of users to engage with virtual economies.

Agent Economics

Web3 native gaming with embedded AI primitives expands the surface area for user personas of all kinds to interact with the industry. For instance, artists who voice characters or models that look at existing bodies of work and pretend to be a third party could be embedded in games.

It may seem far-fetched, but using publicly available bodies of work to replicate an existing person within a virtual economy is quite possible. For instance, Delphi allows users to clone themselves by feeding it text from PDFs, chat conversations, or videos. Presuming you have permission, it is not difficult to imagine Naval Ravikant or Ben Horowitz in a future iteration of a game.

However, that is entirely dependent on creators with large bodies of work willing to lend it out to an ecosystem.

A far more likely scenario would be one where individuals can contribute directly to a game. For instance, Fortnite had issues stopping gamers from making racist statements in AI-generated art. Historically, the belief was that a centralised team of moderators could help with moderation.

But as gaming goes global and cultures intertwine, you will need distributed labour with sufficient context assisting with such things. When Meta took off (in the pre-pandemic years), a huge part of its operation was coordinating moderators in foreign cultures. In a hypothetical world, it is not unreasonable to imagine a distributed labour force labelling in-game events.

Whilst these seem like hypothetical examples, one instance I found to be functional and live today is AIArena. The platform allows gamers to train their agents and compete with those of other gamers. In their model, each character starts with random parameters that dictate its behaviours and responses to specific actions. The game gathers data from users on how they play, which can then be used to replicate the player even in their absence.
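A toy version of that loop helps show what "replicating the player" means. To be clear, this is not AIArena's actual method; it is a bare-bones imitation sketch under my own assumptions. The `ImitationAgent` class, the three actions and the state labels are all hypothetical. The agent starts with random preferences, and every observed move by the human nudges it towards playing the same way.

```python
import random
from collections import defaultdict

ACTIONS = ["attack", "block", "dodge"]


class ImitationAgent:
    """Starts with random preferences and drifts towards the observed player."""

    def __init__(self, seed: int = 0):
        self._rng = random.Random(seed)
        # Each new state gets random initial weights in [0, 1) for every action.
        self.weights = defaultdict(
            lambda: {action: self._rng.random() for action in ACTIONS}
        )

    def observe(self, state: str, action: str) -> None:
        # Record one move made by the human player in this state.
        self.weights[state][action] += 1.0

    def act(self, state: str) -> str:
        # Play the action with the highest accumulated weight.
        prefs = self.weights[state]
        return max(prefs, key=prefs.get)


agent = ImitationAgent(seed=42)
for move in ["block", "block", "attack", "block"]:
    agent.observe("enemy_close", move)

print(agent.act("enemy_close"))  # "block", the player's most frequent choice
print(agent.act("enemy_far"))    # unseen state: falls back to random weights
```

A production system would use a learned model rather than a lookup table, but the shape is the same: random starting parameters, gameplay data in, a stand-in for the player out.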

These agents could, in the future, be embedded across games. The unit economics of building a game is evolving in several ways.

Firstly, by reducing the barrier to building games

Secondly, by making it easier to trace and incentivise users who provide IP, data and models

Thirdly, by enabling developers to have alternative approaches to monetising games

One thing to note here is that open-source AI has been undergoing a boom phase. Organisations like Meta and Alphabet have open-sourced parts of their models over the past few quarters, but the data used to train these models is often not open-access. So, when it comes to consumer applications, indie developers often struggle to compete at their scale due to a lack of resources.

Approaches like incentivising users to contribute non-standardised data could give smaller developers a fighting chance at scaling like large organisations do.

When I first approached this piece, I was not sure if agents were a thing. While doing the research, I met and learned from multiple founders taking distinct approaches to building agent economies. One of them stood out in my mind.

It was the founder of Virtuals. The founder claimed that in the future, multiple people will train single-character agents that could then be leased for interactions. The clear pathway for such an agent would be as a video- or text-based character trained on inputs from multiple individuals. This exists here and now. You will hypothetically have a future where guilds are formed to train single agents. Hundreds of people will train agents that are then embedded across games.

Does that make individual gamers redundant? No. You still need gamers to play these games to train the agents. Agents simply make it possible for the gamers' playing styles to be replicated even when they aren't actively playing. It meaningfully decouples rewards from time and labour. Play-to-earn could become train-to-earn. This is a hypothetical that could play out in the next six months.

However, the larger implication for agent replication pertains to creators in the gaming realm today. One of the companies we spoke to manages 45 of the top 100 Twitch streamers. A challenge for Twitch streamers is the need to post daily streaming content as a mechanism for revenue. The firm we spoke to is helping these creators replicate their style of gaming and interaction within game economies.

It would be the football equivalent of getting to play with Pelé, Maradona and Ronaldo on the same field. Given the complexity of copyrights, monetisation, and the replication of prominent players in new games, it might take a year before it becomes a reality.

When we look at more complex functions like replicating worlds or using in-game assets, the markets just don't exist yet. But that does not mean the situation could not radically change. The market for games that use agents and AI will not be dominated by traditional studios. Instead, it will be led by indie developers looking for infrastructure and alternative mechanisms to monetise their virtual economies.

It remains to be seen whether Web3 native users can provide sufficient liquidity for the marketplaces that emerge on these games.

For now, we are curiously watching,

Joel John

Acknowledgements

Krishna Sriram from Zircuit first discussed with us, a year back, how generative AI would have an impact on gaming. That conversation played a crucial role in directing this article.

Nate from Nim Network helped us understand how autonomous agents are coming to games.
