The End of 'Just Trust Us'

Keeping secrets while making them flow

Today's article is a sponsored deep dive into Arcium, a platform designed to make privacy-preserving computing fast and practical. As businesses and decentralised applications handle more sensitive data, ensuring privacy without sacrificing performance has become a major challenge. Arcium aims to solve this by allowing organisations to securely process and analyse encrypted data, whether for financial transactions, AI training, or collaborative decision-making.

Acknowledgement: Thank you to Yannik, Julian, and the Arcium team for handholding me through technical explanations and thoroughly reviewing the article.

TLDR;

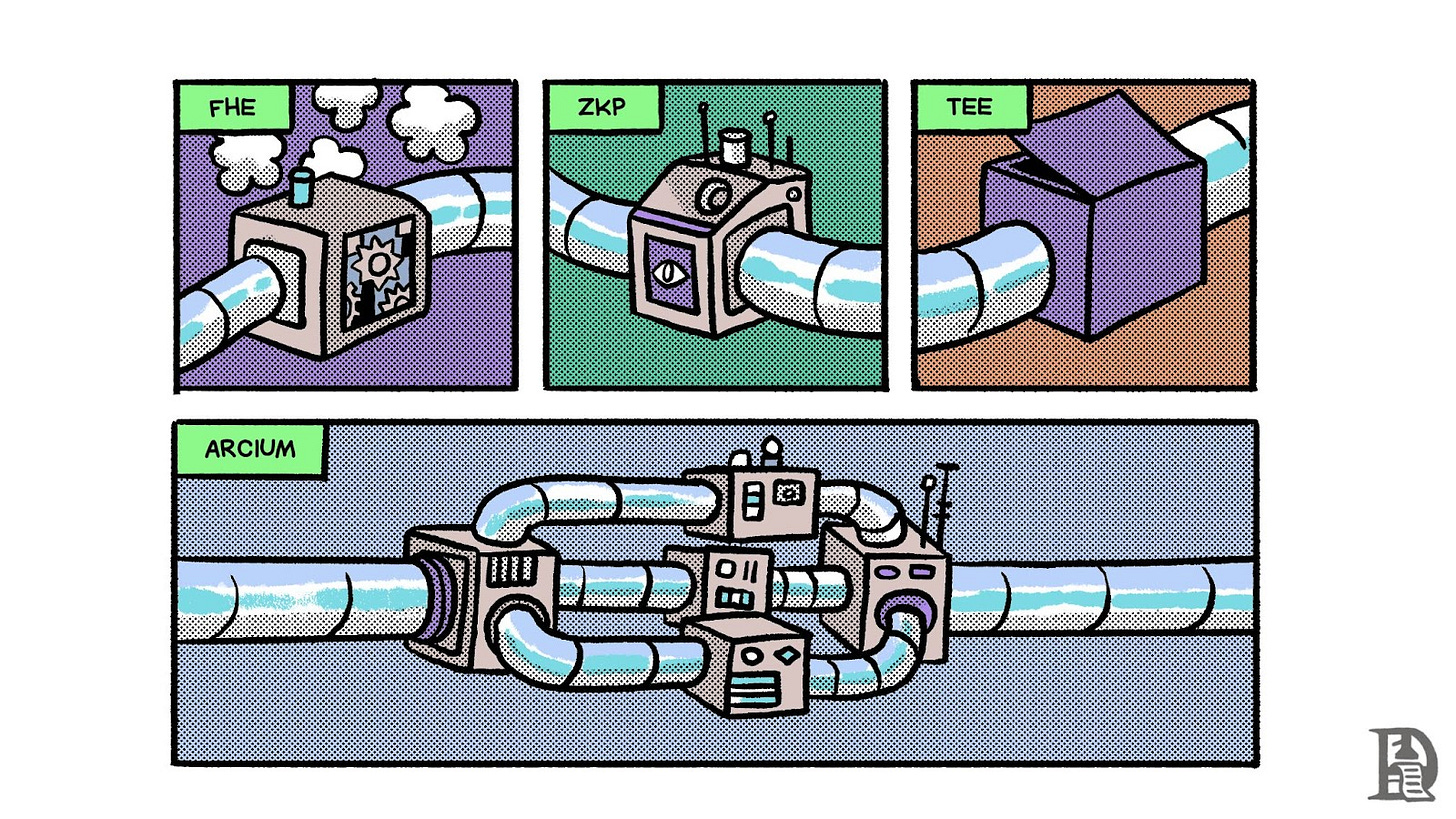

Confidential computing is critical for modern applications but existing solutions have major limitations—FHE is too slow, TEEs are vulnerable to attacks, and ZKPs don't work well for shared encrypted data.

Arcium reimagines privacy-preserving computation through a new Multi-Party Computation (MPC) architecture that requires only one honest node for privacy while using economic incentives (staking/slashing) to ensure reliable execution.

Arcium’s acquisition of Inpher allows faster cryptographic operations, a more efficient compiler, and hardware acceleration, making encrypted computing practical at scale.

The system achieves scalability through parallelised "MXEs" (Multi-Party eXecution Environments) that allow independent clusters of nodes to process encrypted computations simultaneously, making it 10,000x faster than FHE for many operations.

Real-world applications include preventing MEV in DeFi, enabling collaborative AI models and on-chain agents training on private datasets, and allowing businesses to analyse joint data without revealing sensitive information.

The developer experience is streamlined. Adding privacy is as simple as marking functions confidential with a single line of code, making enterprise-grade encryption accessible without deep cryptography expertise.

Hello,

For centuries, the Kingdom of Veritasveil upheld a peculiar tradition. Once a year, each noble family reported their wealth to the King's treasury—not for taxation but to gauge the prosperity of his realm. Yet, the proud and secretive nobles despised revealing their fortunes. Some understated their wealth to appear humble, others exaggerated to seem more powerful, and a handful bribed treasury officials to manipulate the records.

This created a riddle for the King: how do you uncover the truth when no one wants to reveal their secrets?

As the years passed, the King's frustration grew. He needed a clear understanding of his realm's strength—its wealth and prosperity. But each year, the reports he received were distorted by ambition and deceit. The stakes were high. If he could not discern the actual state of his kingdom, how could he prepare for the future? How could he lead his people wisely, secure alliances, and protect his borders without accurate information?

Meanwhile, the nobles saw their secrets as shields. They hid their vulnerabilities from rivals and even from the King himself. And so, the annual ritual became a game of half-truths, manoeuvres, and veiled threats—a kingdom shrouded in illusion.

The King’s advisors, desperate for a solution, eventually stumbled upon a novel idea: what if they could calculate the total wealth of the realm without requiring any individual noble to reveal their fortune?

They devised a system in which each noble would secretly add a random number to their wealth. This "scrambled" value would be passed to the next noble, who would add their own wealth along with another secret number. The process would continue until all the nobles had contributed their scrambled amounts. In the end, the King would receive a sum that contained all of the secret numbers alongside the combined wealth of the nobles.

To determine the true wealth of his kingdom, the King needed only to remove the effect of the random numbers. Since each noble had recorded their secret number, they could submit it anonymously, allowing the King to subtract all the secret numbers from the total sum. This way, the true combined wealth of Veritasveil was revealed, without any noble having to disclose their individual fortune.
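For readers who prefer code to parables, the advisors' trick can be sketched in a few lines of Python. This is a toy illustration only: the function names, the sample fortunes, and the choice of modulus are my own assumptions, and a real protocol would need far more care about who sees what.

```python
import secrets

MODULUS = 2**64  # arithmetic wraps around, so the running total looks random

def masked_round(wealths):
    """Pass a running total from noble to noble; each adds wealth plus a secret mask."""
    running_total = 0
    masks = []
    for wealth in wealths:
        mask = secrets.randbelow(MODULUS)  # known only to this noble
        masks.append(mask)
        running_total = (running_total + wealth + mask) % MODULUS
    return running_total, masks

def unmask(scrambled_total, masks):
    """The King subtracts the anonymously submitted masks from the scrambled sum."""
    return (scrambled_total - sum(masks)) % MODULUS

wealths = [1200, 450, 9800, 300]           # hypothetical fortunes
scrambled_total, masks = masked_round(wealths)
print(unmask(scrambled_total, masks))      # 11750
```

The King only ever sees the scrambled total and an unordered pile of masks, so he learns the sum without being able to attribute any number to a particular noble.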

Today, financial markets face a challenge similar to that of Veritasveil’s King. When a major pension fund needs to sell a million shares of Apple stock, using public exchanges would tip off high-frequency traders who could move prices to their advantage, costing the fund millions of dollars. This is why "dark pools" (private exchanges for large trades) were created. Designed to avoid front-running and price manipulation, dark pools became the go-to solution for institutional investors seeking confidentiality.

The irony is hard to ignore. Today, dark pools handle a significant share of US trading volume, but they aren’t truly "dark". Major banks routinely exploit the very system meant to ensure privacy, profiting from privileged access to client trades. There are also other avenues of manipulation, such as the owner of the dark pool changing the order of transactions. The SEC has charged both Barclays and Citi over dark pool violations.

When dark pools cannot be verifiably dark, what is the solution then? The answer is similar to what the King’s men arrived at but with a modern twist.

The same solution that helped Veritasveil can be used in the modern world through a concept known as secure Multi-Party Computation (MPC). Just as the nobles added secret numbers to keep their individual wealth hidden, MPC allows multiple parties to jointly compute a result—such as the total value of a set of trades—without revealing their private inputs. Each participant keeps their data encrypted, but the group can still arrive at a useful result. In financial markets, this means conducting large trades securely without leaking any information that could be exploited.

The combination of cryptography and secure computation offers a glimmer of hope in this timeless quest. As the Kingdom of Veritasveil once struggled, so too does modern finance. Perhaps, this time, the tools to unveil the truth without betraying secrets are finally within reach.

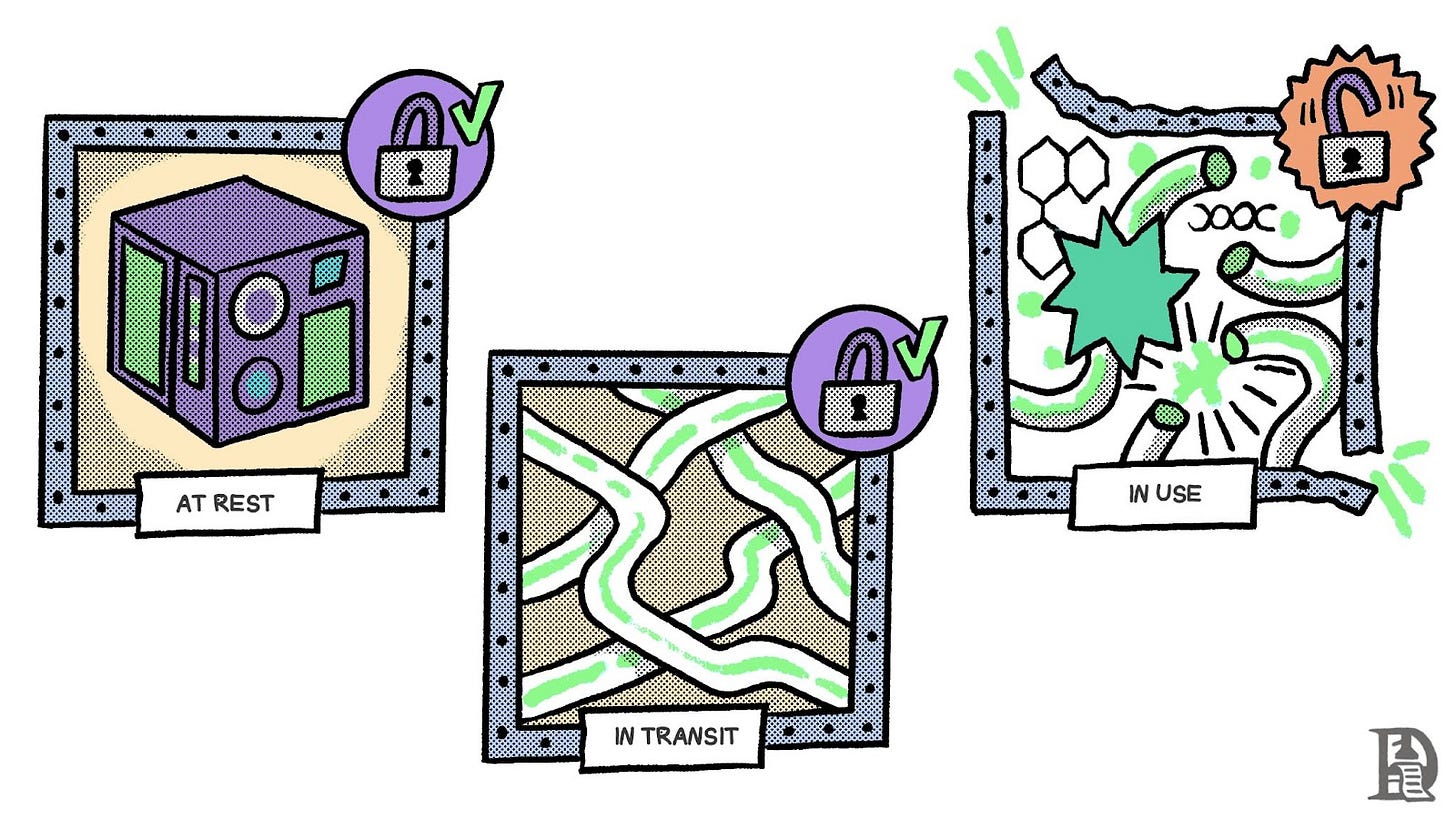

The key to secure computation lies in understanding the different stages of data and ensuring privacy in each one. Data is always in one of three stages: at rest, in transit, or in use. At rest means it is stored somewhere. In transit means it is travelling through networks. In use means some computation is being run on it.

So far, we have successfully kept data encrypted at rest and in transit. But to be computed on, data typically has to be decrypted first. That makes it vulnerable to leaks and limits where, and by whom, computations can be performed.

In this article, we explore the landscape of solutions that enable the use of data without decrypting or revealing it. We examine technologies such as Zero-Knowledge Proofs (ZKPs), Fully Homomorphic Encryption (FHE), Trusted Execution Environments (TEEs), and MPC. Finally, we analyse how Arcium leverages MPC to perform computations on encrypted data using blockchains like Solana.

ZKPs

Imagine you have a secret password to open a safe. You need to prove to someone that you know the password, but you don't want to tell them what it is. That's exactly what a Zero-Knowledge Proof does. It lets you prove you know something without revealing the actual information. Ethereum's Layer 2 solutions like Polygon zkEVM, StarkNet and ZKSync use ZKPs to verify thousands of transactions were processed correctly off-chain, without having to re-execute them all on mainnet. This enables massive scaling while ensuring security.

Here's a simple analogy. Consider a complex maze where you know the correct path to the exit. Instead of showing someone your map or giving them directions, you can prove you know the solution by walking through the maze multiple times. Each time, they drop you in at the start, leave the maze themselves, and wait for you at the exit. After several successful attempts, they become convinced you know the solution without ever learning the actual path you took.

In slightly more technical terms, ZKPs work by turning a statement into a mathematical equation that can be verified without revealing the underlying data. For example, instead of showing your actual age to prove you're over 18, you could use a ZKP to mathematically prove you meet the age requirement without revealing your birth date.
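To make the "prove you know it without revealing it" idea concrete, here is a minimal sketch of a classic interactive proof of knowledge, the Schnorr identification protocol. It is not what any particular rollup uses, and the group parameters below are toy values I picked for illustration; they are far too small to be secure.

```python
import secrets

# Toy group parameters (assumed for illustration; far too small to be secure).
P = 23  # prime modulus
Q = 11  # prime order of the subgroup generated by G
G = 2   # generator of that subgroup: 2**11 % 23 == 1

def keygen():
    secret = secrets.randbelow(Q - 1) + 1   # the "password", never shared
    public = pow(G, secret, P)              # safe to publish
    return secret, public

def commit():
    nonce = secrets.randbelow(Q)            # fresh randomness for every round
    return nonce, pow(G, nonce, P)

def respond(secret, nonce, challenge):
    return (nonce + challenge * secret) % Q

def verify(public, commitment, challenge, response):
    # Checks G^response == commitment * public^challenge (mod P).
    return pow(G, response, P) == (commitment * pow(public, challenge, P)) % P

def run_round(secret, public):
    nonce, commitment = commit()            # prover speaks first
    challenge = secrets.randbelow(Q)        # verifier picks a random challenge
    response = respond(secret, nonce, challenge)
    return verify(public, commitment, challenge, response)

secret, public = keygen()
print(all(run_round(secret, public) for _ in range(20)))  # True
```

Like the repeated walks through the maze, each round gives the verifier more confidence, yet the responses are masked by a fresh random nonce, so they reveal nothing about the secret itself.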

But ZKPs do have limitations. They don’t work on encrypted data: whoever runs the computation does so on decrypted data and then proves the result is correct without revealing that data. It's also important to note that while ZKPs keep the data private, the computation program itself remains visible. Only the inputs and outputs are protected. So, ZKPs are excellent at proving statements about data, but they don't work well for shared systems like blockchains where multiple parties need to work with the same encrypted information simultaneously. It’s like having a group project where everyone needs to actively work on the same document. ZKPs can prove facts about the document, but they can't help everyone collaborate on it while keeping it private.

There is ongoing research into even more advanced techniques called indistinguishability obfuscation that could theoretically hide the program itself, but this remains a prospect in the distant future.¹

FHE

A few months ago, I hosted Rand Hindi, the CEO of Zama Labs, on our podcast. Zama is championing FHE and how it can be easily utilised by developers. Sunscreen is another company building developer tools to make FHE more accessible. Founded in 2023, they've developed a compiler to help engineers build FHE programs and recently raised $4.65 million in seed funding led by Polychain Capital.

Before getting into how Zama plans to make FHE simple enough for developers to use, here is a short note on what FHE is. I’ve tried to use analogies so that it doesn’t get too technical. Imagine you have a magical lockbox that can perform math. Not only can you lock numbers inside it, but you can also add and multiply² these locked numbers without ever opening the box. This is essentially what Fully Homomorphic Encryption (FHE) does. It lets you perform calculations on encrypted data while keeping it encrypted the entire time.

Here's a simple way to think about it. Let's say Sid and Shlok each have a secret number they want to add together, but they don't want anyone (not even the computer doing the calculation) to see their numbers. With FHE, it's like they each put their number in the special lockbox. They can add numbers inside the box without opening it. When they're done, only Sid and Shlok, who have the key, can unlock the final box to see the sum.
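The lockbox idea can be tried out with a much simpler relative of FHE. The sketch below uses the Paillier cryptosystem, which is only additively homomorphic (it can add locked numbers but not multiply them), so it is a stand-in for the concept rather than real FHE. The tiny primes and the function names are my own assumptions for illustration and offer no real security.

```python
import math
import secrets

def keygen(p=293, q=433):
    """Toy key generation with tiny fixed primes (illustration only)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)   # modular inverse; valid because we use g = n + 1
    return n, (lam, mu)    # public key, private key

def encrypt(n, message):
    n_sq = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, message, n_sq) * pow(r, n, n_sq)) % n_sq

def add_encrypted(n, c1, c2):
    """Multiplying ciphertexts adds the hidden plaintexts."""
    return (c1 * c2) % (n * n)

def decrypt(n, private_key, ciphertext):
    lam, mu = private_key
    u = pow(ciphertext, lam, n * n)
    return ((u - 1) // n * mu) % n

n, private_key = keygen()
sid_box = encrypt(n, 42)      # Sid's secret number
shlok_box = encrypt(n, 58)    # Shlok's secret number
sum_box = add_encrypted(n, sid_box, shlok_box)  # done without the private key
print(decrypt(n, private_key, sum_box))         # 100
```

Whoever performs `add_encrypted` never sees 42 or 58. Only the holder of the private key can open the final box.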

Getting a bit more technical, FHE schemes are built on mathematical structures called lattices, which are like a complex grid of points in space. Your data gets transformed into coordinates on this grid in such a way that mathematical operations still work on them, but anyone who merely sees the coordinates can't figure out the original numbers without the decryption key.

These magical lockboxes come with a significant challenge. They're incredibly slow to use. Even simple calculations like addition take much longer when performed on encrypted data.

The slowdown happens because of what cryptographers call "noise." Each time you perform an operation on encrypted data, small mathematical errors accumulate. Our lockbox gets cloudier and cloudier each time we use it. Eventually, it becomes so cloudy that we can't read the result anymore. To fix this, FHE needs to periodically "clean" the lockbox through a process called bootstrapping, which involves mathematical operations to refresh the encryption without revealing the data.
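The cloudy lockbox can be demonstrated with a toy scheme over plain integers. Production FHE is lattice-based and works differently in detail, but the noise behaves in a similar way. Everything here (the key, the fixed noise value, the function names) is an assumption chosen so the run is reproducible; a real scheme draws its noise at random and uses vastly larger numbers.

```python
SECRET_KEY = 2001       # odd secret integer (toy-sized)
FRESH_NOISE = 3         # fixed for reproducibility; normally random
LARGE_MULTIPLE = 12345  # hides the noisy bit behind a multiple of the key

def encrypt(bit):
    return bit + 2 * FRESH_NOISE + SECRET_KEY * LARGE_MULTIPLE

def noise(ciphertext):
    """What remains once the multiple of the secret key is stripped away."""
    return ciphertext % SECRET_KEY

def decrypt(ciphertext):
    # Only correct while the accumulated noise stays below SECRET_KEY.
    return noise(ciphertext) % 2

def multiply(c1, c2):
    """Multiplying ciphertexts multiplies the hidden bits, and their noise."""
    return c1 * c2

product = encrypt(1)
for factors in range(1, 5):
    # Columns: number of encrypted 1s multiplied, remaining noise, decrypted bit
    print(factors, noise(product), decrypt(product))
    product = multiply(product, encrypt(1))
```

With these numbers the noise grows 7, 49, 343 and then overflows the key on the fourth factor, at which point the product of four encrypted 1s decrypts to 0. Bootstrapping, which this sketch does not implement, is the step that resets the noise before that happens.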

To put this in perspective, publicly available FHE systems have historically been reported to handle about 5 transactions per second. That's like having a calculator that needs a fifth of a second to add two numbers together, far too slow for most real-world applications. The computational overhead is enormous. A simple multiplication operation in FHE might require millions of traditional computer operations to complete. While private implementations may have achieved better performance, comparing FHE systems is complex since their speed depends heavily on factors like the specific operations being performed, hardware optimisation, and implementation details.

While companies like Zama Labs and Fabric are working on making FHE faster through specialised hardware (like building better lockboxes), the fundamental limitations remain. It's a bit like trying to run through water. No matter how strong you are, the resistance of the water will always slow you down compared to running on land.

These limitations go beyond speed. FHE also struggles with operations that require comparing numbers or making decisions based on encrypted values. It's like trying to determine which of two numbers in locked boxes is larger without opening them. Technically possible, but extremely complicated and slow.

Zama’s technological advances have made FHE 100x faster and integrated it into existing developer tools, making it accessible to developers without needing a deep understanding of cryptography.

There is more to it, though. When you encrypt data to perform operations on it, its size increases. By 20 thousand times! A key breakthrough is Zama’s work in reducing the size of FHE-encrypted data. What used to expand data sizes by 20,000x has now been compressed to just 50x. But it is still not suitable for practical purposes. With more improvements coming, this can be brought down to 10x, making it viable for use on Ethereum and other blockchain platforms. Dedicated devices known as application-specific integrated circuits (ASICs) are already being manufactured to try to make FHE viable for real-world applications. But they are a few years away.

This is why many projects, including Arcium, have turned to alternative approaches like MPC and other forms of homomorphic encryption (Somewhat or Semi Homomorphic Encryption described later), which can achieve similar privacy guarantees while being thousands of times faster. Notably, even FHE systems ultimately require MPC protocols to reveal information — a step that's always necessary at some point for blockchain applications. By focusing directly on MPC and more targeted forms of homomorphic encryption, these projects can achieve the required functionality more efficiently. But I’m getting ahead of myself.

TEEs

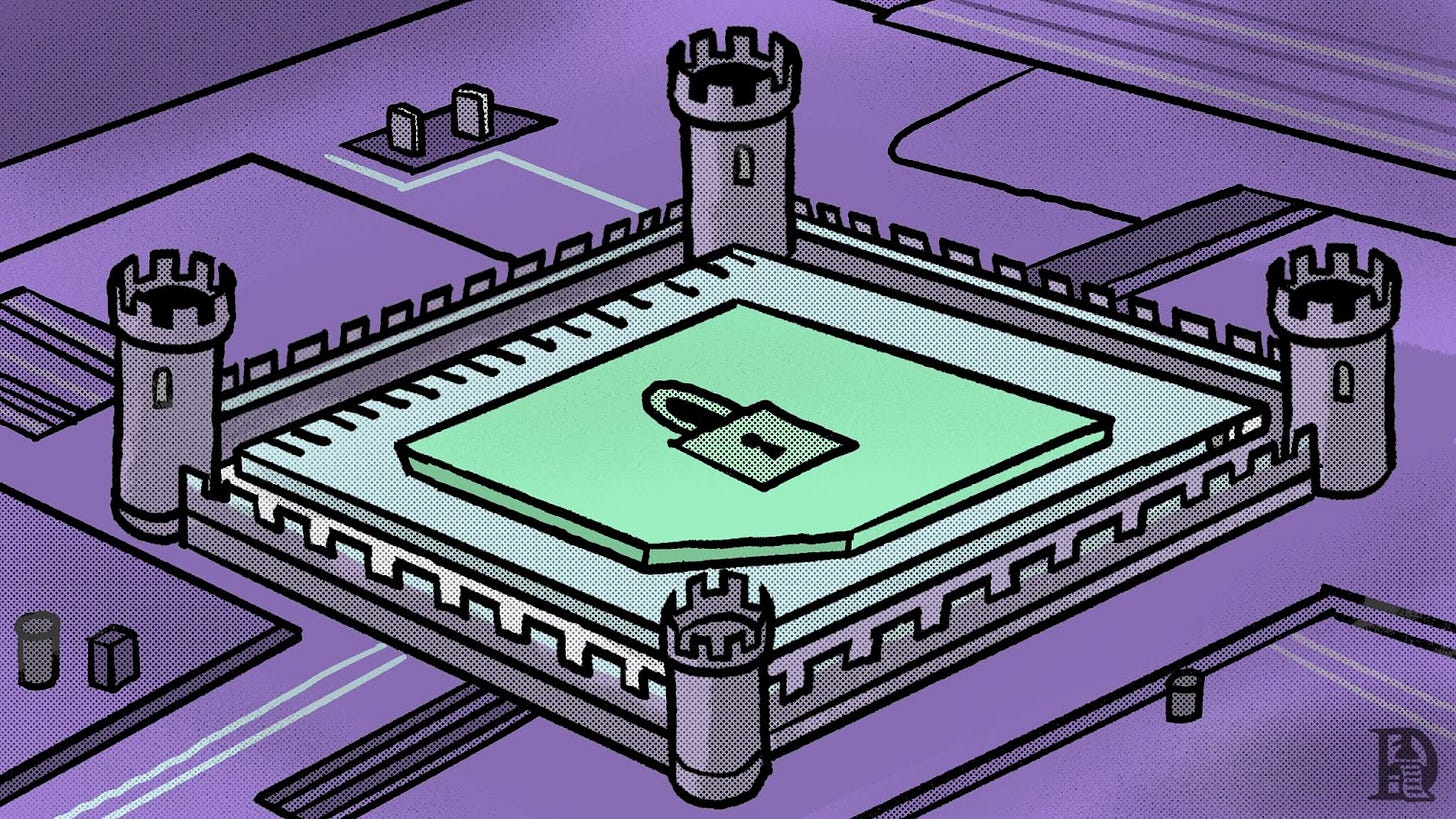

A Trusted Execution Environment is like a secure vault inside a computer's processor. If your computer is a busy office building, the TEE is a special room with opaque walls where sensitive documents can be processed. Even if the rest of the building is compromised, what happens in this room stays private.

Intel is a major manufacturer of TEEs, and its technology is used by services like Signal Messenger for contact discovery. But researchers have repeatedly demonstrated vulnerabilities and side-channel attacks against different TEE platforms, including Intel’s SGX and TDX and AMD’s SEV. These attacks expose the fragility of purely hardware-based security.

Cloud providers like Microsoft Azure use TEEs to offer "confidential computing" services where customers can process sensitive data. But again, sophisticated attacks have proven successful against these environments, raising concerns about their reliability for highly sensitive applications.

Here's how it works: When data enters a TEE, it's decrypted and processed within this secure area. It's as if you have a guard checking everyone's ID at the door, ensuring only authorised code and data can enter. Once inside, the data is protected from other programs running on the same computer, including the operating system itself.

TEEs have a critical weakness—they're vulnerable to what's called "side-channel attacks." Using our office analogy, while the walls are opaque, clever attackers might still learn secrets by timing how long people spend in the room, monitoring power usage, or even listening to subtle sounds from the room. In practice, TEEs have been repeatedly compromised through these types of sophisticated attacks.
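A software analogue gives a feel for how "timing how long people spend in the room" leaks secrets. The sketch below is not an attack on any TEE. It is a generic, assumed example in which a comparison routine stops at the first mismatch, and an observer who can only count how long each comparison runs recovers a hidden PIN digit by digit.

```python
def leaky_compare(secret, guess):
    """Early-exit comparison. Returns (equal, steps); steps is the side channel."""
    steps = 0
    for secret_char, guess_char in zip(secret, guess):
        steps += 1
        if secret_char != guess_char:
            return False, steps
    return len(secret) == len(guess), steps

def recover_secret(secret, alphabet="0123456789"):
    """The attacker never reads `secret`; it only observes leaky_compare's
    result and step count (and is assumed to know the secret's length)."""
    known = ""
    for position in range(len(secret)):
        # "x" is outside the alphabet, so padding never matches by accident.
        padding = "x" * (len(secret) - position - 1)
        for symbol in alphabet:
            equal, steps = leaky_compare(secret, known + symbol + padding)
            # A correct symbol lets the comparison run one step further.
            if equal or steps > position + 1:
                known += symbol
                break
    return known

print(recover_secret("4096"))  # 4096
```

A four-digit PIN falls in at most 40 guesses instead of up to 10,000. Real side channels measure time, power, or cache behaviour rather than a tidy step counter, but the principle is the same.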

The Secret Network offers a sobering example of TEE vulnerabilities. In August 2022, researchers discovered two critical flaws (xAPIC and MMIO vulnerabilities) that could expose the network's "consensus seed", essentially a master key capable of decrypting every private transaction ever made on the network. While fixes were implemented by October 2022, there was no way to confirm whether the vulnerabilities had already been exploited, or whether an attacker who had extracted the seed could keep eavesdropping in the future. Even well-meaning node operators might have unknowingly created conditions that could enable future attacks.

The incident highlighted a fundamental challenge with TEE-based systems: once compromised, not only are current data at risk, but all historical private transactions could potentially be exposed.

At the end of 2024, a major vulnerability in the AMD SEV family of TEEs was made public, which allowed attackers to exfiltrate data for an attack cost of only $10. This exploit clearly showed that attacks don’t require large budgets accessible only to state actors or large corporations. It is not only Intel’s TEEs: any TEE is vulnerable to these kinds of attacks.

This discovery sends a clear warning that TEEs from any manufacturer might not be fully reliable when an attacker gains physical access to hardware. Allowing anyone to run a TEE node from their basement in distributed networks without additional cryptographic safeguards like secure Multi-Party Computation (MPC) will only lead to more exploits and attacks.

Besides side-channel attacks, another big problem associated with TEEs is their functional reliance on a proprietary, closed, and fully trusted supply chain. Within this supply chain, the manufacturers generate secret keys they fuse into chips, run attestation services, and upgrade the chip firmware. All this represents a supply chain consisting of single points of failure. This kind of reliance on trusted supply chains becomes critical when employing this technology in the decentralised setting as the Secret Network vulnerability strikingly highlighted.

This reality check has pushed many projects, including Arcium, to explore alternative approaches that don't solely rely on hardware-based security. Instead of trusting specialised hardware that might have hidden vulnerabilities, they've turned to cryptographic solutions that remain secure even if the underlying hardware is compromised. A secure system should not rely on a TEE alone, but it can use one as an additional layer on top of a cryptographic solution.

MPC

Remember the Kingdom of Veritasveil, where the King's advisors devised that clever solution with random numbers to calculate the realm's total wealth? That centuries-old riddle perfectly illustrates how MPC works today. Instead of nobles protecting their secrets, we have computers (called nodes) that split sensitive information into meaningless pieces. Like the nobles' scrambled numbers, these fragments reveal nothing on their own. But when the nodes work together, following precise mathematical rules, they can perform complex calculations while keeping the underlying data completely private.
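Splitting a value into "meaningless pieces" is usually done with additive secret sharing. The sketch below is a minimal illustration under my own assumptions (the field size, the three-node setup and the function names are not from Arcium): each node holds one random-looking share, yet the nodes can add two hidden numbers by adding their own shares locally.

```python
import secrets

PRIME = 2**61 - 1  # field size (an assumed choice; any large prime works)

def share(value, node_count):
    """Split value into node_count shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(node_count - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares

def add_shared(shares_a, shares_b):
    """Each node adds the two shares it holds; no communication is needed."""
    return [(a + b) % PRIME for a, b in zip(shares_a, shares_b)]

def reconstruct(shares):
    """Only the combination of all shares reveals the value."""
    return sum(shares) % PRIME

wealth_a = share(1200, 3)   # one share per node
wealth_b = share(450, 3)
total = add_shared(wealth_a, wealth_b)
print(reconstruct(total))   # 1650
```

Any subset of fewer than all the shares is just random numbers, which is why a single honest node that keeps its share to itself is enough to protect the input. Addition is the easy case. Multiplying shared values is where nodes have to exchange messages, which is the source of the communication overhead in MPC.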

But there's a catch. These nodes need to constantly communicate with each other to perform even simple calculations. As more nodes join the network, this chatter increases dramatically. It's rather like having to pass messages through every noble house in the kingdom—even basic arithmetic becomes quite elaborate.

Traditional MPC systems face another crucial challenge: they typically need most participants to be honest for the system to work properly. This is similar to how blockchain networks like Bitcoin or Ethereum require a majority of validators to be honest to maintain network security, a concept known as Byzantine Fault Tolerance (BFT). Some more secure versions, known as dishonest majority protocols, keep data private even if only one participant is honest. Such a system stays secure even if 99 out of 100 participants are compromised.

Modern MPC implementations include redundancy measures to ensure that no single participant can halt computations by going offline. But these systems still face challenges when participants actively try to disrupt computations by submitting incorrect data. The system can't efficiently filter out bad actors without a way to identify which participants are acting maliciously. This is akin to our nobles from Veritasveil deliberately submitting wrong numbers to corrupt the total wealth calculation: the system could detect that something was wrong, but it couldn't pinpoint the culprit.

This inability to identify and remove malicious actors has historically limited the practical deployment of dishonest majority systems, despite their superior privacy guarantees. This is where Arcium's ability to identify cheaters cryptographically becomes crucial, as we'll explore in the next section.

Rethinking Confidential Compute from First Principles

While technologies like FHE, TEEs and ZKPs advance private (or confidential)³ computing in their own ways, each faces fundamental limitations. FHE suffers from computational overhead that makes it impractical for most real-world applications. TEEs, despite their efficiency, have proven vulnerable to sophisticated side-channel attacks. And ZKPs, while excellent for proving computations, aren't suited for shared state systems where multiple parties need to work with encrypted data simultaneously.

This is where Arcium enters the picture, reimagining private computing from first principles. Rather than accepting traditional trade-offs between security, scalability and trust assumptions, Arcium has developed an architecture that aims to overcome these fundamental challenges.

At the heart of Arcium's design is a breakthrough in how Multi-Party Computation can be implemented. Traditional MPC systems typically require most participants to be honest for the system to work reliably. Even more secure versions that could theoretically function with just one honest party are vulnerable to disruption. Any participant could derail computations by going offline or sending incorrect data.

Arcium's innovation lies in combining a dishonest majority protocol with the ability to cryptographically identify malicious actors. As CEO Yannik Schrade explains, "dishonest majority protocols normally allow for censorship and high DDoS potential because one bad actor could just cause the computation to fail. We overcome this through a cheater-identification-protocol that generates cryptographic proof of misbehaviour, submitted to a smart contract which then punishes the malicious node through slashing."

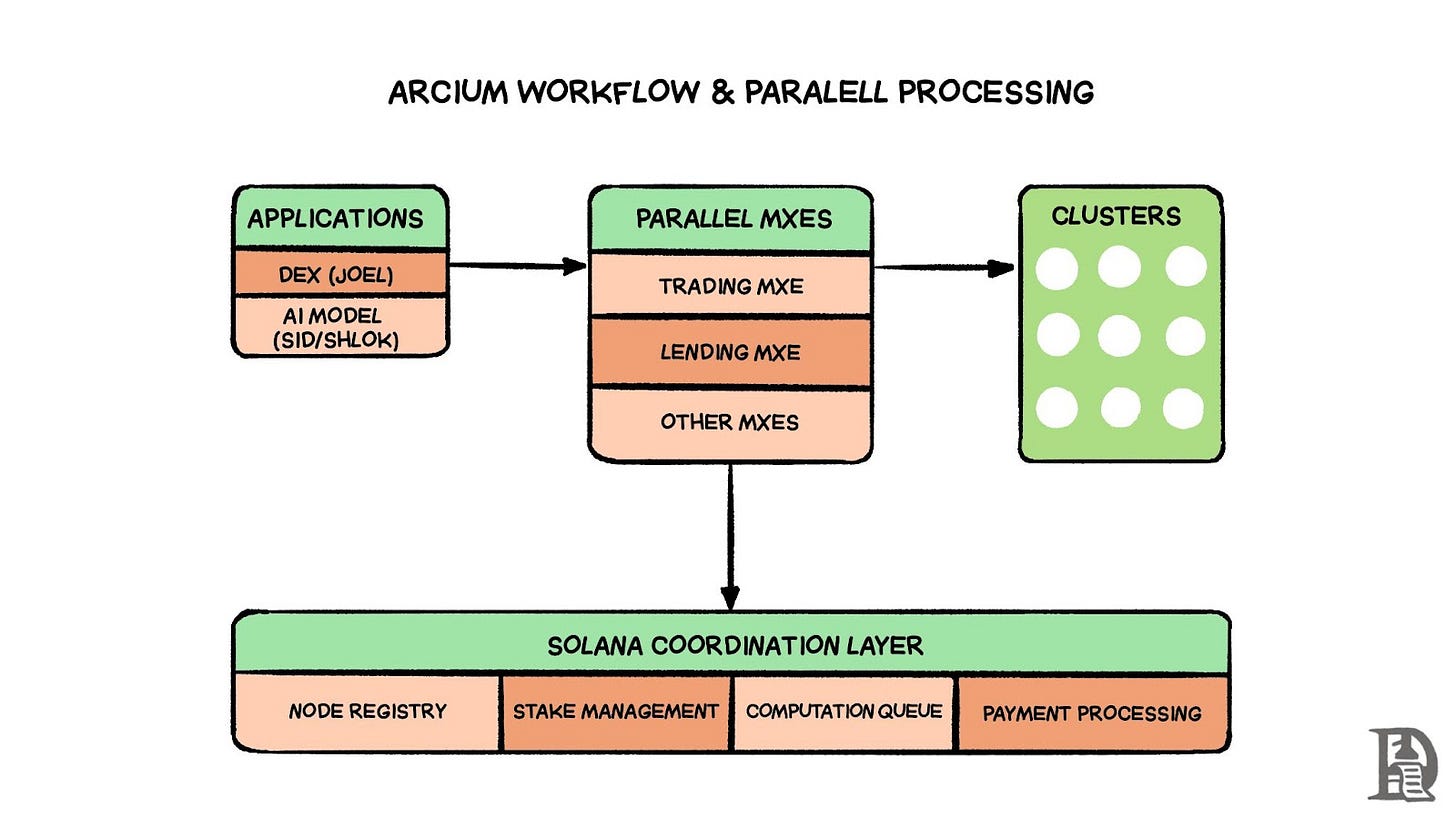

This architectural approach creates powerful incentives that align node operators' economic interests with honest behaviour. Just as importantly, it enables true parallelisation of confidential computations through dedicated MPC environments called MXEs (Multi-Party eXecution Environments). Rather than forcing all computations through a single pipeline, Arcium's design allows multiple independent computation clusters to operate simultaneously.

But before diving into the specifics of how Arcium achieves this, let's examine the key building blocks that make this architecture possible.

The Building Blocks of Arcium

Think of Arcium as a distributed supercomputer designed specifically for encrypted computations. Just as a traditional supercomputer has processors, memory, and an operating system, Arcium has its own specialised components working in concert.

Multi-Party eXecution Environments (MXEs)

At the heart of Arcium's architecture are MXEs—dedicated environments for secure Multi-Party Computation. An MXE is like a secure virtual machine that defines how encrypted computations should be executed. When developers want to run confidential computations, they first create an MXE specifying their security requirements and computational needs.

MXEs are highly configurable, allowing developers to define everything from their security model to how data should be handled and processed. A developer might require TEE-enabled nodes for additional security or specify particular data handling parameters based on their application's needs. What makes MXEs particularly powerful is their ability to operate independently and in parallel. Unlike traditional MPC systems that force all computations through a single pipeline, MXEs can run concurrent computations across different clusters of nodes.

Clusters: The Computational Backbone

If MXEs define how computations should run, Clusters determine where they run. A Cluster is a group of Arx nodes (individual computing units) that collectively execute Multi-Party Computations.

What makes Arcium's Cluster design unique is its flexibility. Computation customers can create either permissioned or non-permissioned setups, selecting specific nodes based on their reputation and capabilities. The design also accounts for practical concerns like computational load requirements and fault tolerance through backup nodes.

Importantly, Clusters enforce Arcium's security model. Even if most nodes in a Cluster become compromised, as long as one honest node remains, the privacy of computations is preserved. The system's ability to identify and punish malicious behaviour through cryptographic proofs makes this security model practical.

Arx Nodes: The Computing Units

Arx nodes are the individual computers that make up Arcium's network. Each node contributes computational resources and participates in Multi-Party Computations. The name "Arx" comes from the Latin word for fortress, reflecting each node's role in securing the network.

To ensure reliable performance and security, nodes must meet specific hardware requirements and maintain stake (collateral) proportional to their computational capability claims. The higher their share of total compute, the greater their responsibility. The stake should ideally be higher than the potential gain achieved by cheating the system. This creates strong economic incentives for honest behaviour. Nodes that attempt to cheat or fail to perform their duties face slashing penalties.

arxOS: The Execution Engine

Tying everything together is arxOS, Arcium's distributed, encrypted operating system. It coordinates how computations flow through the network, managing computation scheduling, node coordination, resource allocation, and state management. arxOS ensures that despite being distributed across many nodes, the system operates cohesively while maintaining security guarantees.

State management within arxOS is performed in multiple ways. arxOS supports computation inputs and outputs as either plaintext or ciphertext. Persistent on-chain data can be directly passed as inputs into computations, with mutated results being written back to the chain with the finalisation of a computation.

On-chain operations have transaction size limits. For tasks whose input or output data exceeds those limits, off-chain data management can be used as both the source of inputs and the destination for outputs. This flexibility is significant: developers can design applications with lower-volume data requirements that integrate directly with smart contract environments, as well as applications that manage persistent state off-chain.

Inside Arcium

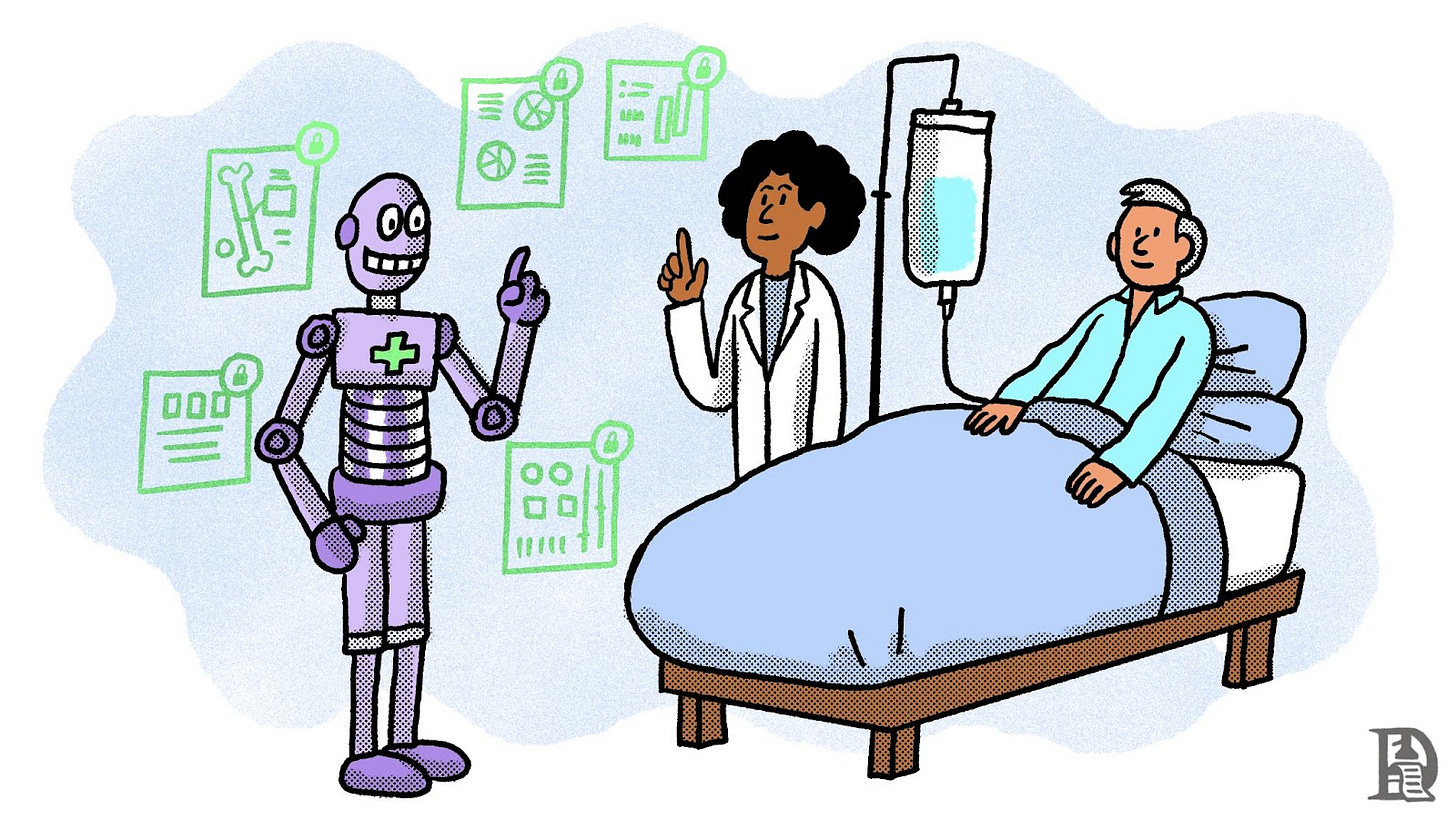

Imagine a global research project where multiple pharmaceutical companies want to combine their proprietary drug development data to find a cure for a disease. Each company has valuable research that could help, but none want to reveal their secrets to competitors. Traditional approaches would require everyone to trust a central laboratory with their data. For most companies, this is an unacceptable risk.

What if, instead, these companies could collaborate without ever revealing their individual research? Better yet, what if they could run complex analyses on their combined data while keeping each company's contribution completely private? This is essentially what Arcium is building towards. A system where multiple parties can not only store sensitive data securely but also perform complex computations on it without ever decrypting or revealing the underlying information.

The challenge is keeping secrets while actively working with them.

Redefining Trust in the Digital Age

When it comes to keeping data private, Arcium's system remains confidential as long as just one participant stays honest, even if everyone else tries to peek at the encrypted data. It's like having a hundred people guard a secret, where a single trustworthy guard is enough to keep it safe.

Please note that there's an important distinction here. While privacy requires just one honest participant, actually performing computations requires everyone to work together. As Arcium's CEO explains, "We just require the honesty of one participant to have confidentiality. But in order to produce an output, we need all (or a certain threshold of) nodes in a cluster to work together." It’s like a complex orchestral performance—while one person can keep the musical score secret, you need all musicians to play for the symphony to come alive.

This creates an interesting challenge: how do you ensure nodes actually perform their roles as they should? When a node misbehaves, either by going offline or submitting incorrect data (for example, by flipping even a single bit in their portion of the encrypted calculation), the computation will fail rather than produce incorrect results. When this happens, the system generates cryptographic proof identifying exactly which node caused the disruption. This proof is like a digital surveillance camera catching someone in the act. It's submitted to smart contracts on Solana, which automatically enforce penalties by slashing the node's staked collateral. This is a practical solution to the node accountability problem.
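The article does not spell out Arcium's cheater-identification protocol, so the following is only a loose sketch of one simple ingredient such systems can use: commit first, reveal later. Every name in it (`commit`, `find_cheaters`, `StakeRegistry`, the 50% penalty) is hypothetical, and a plain Python class stands in for the Solana smart contract. Note that it only catches a node that alters its share after committing; real protocols need stronger machinery.

```python
import hashlib
import secrets

def commit(share, nonce):
    """Binding fingerprint of a share, published before anything is revealed."""
    return hashlib.sha256(nonce + share.to_bytes(16, "big")).hexdigest()

def find_cheaters(commitments, reveals):
    """Return ids of nodes whose revealed share does not match their commitment."""
    return [
        node_id
        for node_id, (share, nonce) in reveals.items()
        if commit(share, nonce) != commitments[node_id]
    ]

class StakeRegistry:
    """Hypothetical stand-in for an on-chain contract holding node collateral."""

    def __init__(self, stakes):
        self.stakes = dict(stakes)

    def slash(self, node_id, fraction=0.5):
        penalty = int(self.stakes[node_id] * fraction)
        self.stakes[node_id] -= penalty
        return penalty

shares = {"node-a": 17, "node-b": 99, "node-c": 4}
nonces = {node_id: secrets.token_bytes(16) for node_id in shares}
commitments = {n: commit(shares[n], nonces[n]) for n in shares}

reveals = {n: (shares[n], nonces[n]) for n in shares}
reveals["node-b"] = (shares["node-b"] ^ 1, nonces["node-b"])  # flips a single bit

registry = StakeRegistry({"node-a": 1000, "node-b": 1000, "node-c": 1000})
for node_id in find_cheaters(commitments, reveals):
    registry.slash(node_id)
print(registry.stakes)  # {'node-a': 1000, 'node-b': 500, 'node-c': 1000}
```

The mismatch between commitment and reveal plays the role of the surveillance footage: anyone can recompute the hash and confirm which node deviated, so the penalty does not depend on trusting an accuser.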

From Request to Reality: How Arcium Works

Let's follow Joel, a developer building a decentralised exchange (DEX). He wants to prevent front-running by keeping order flow private until execution. Meanwhile, Sid and Shlok are data scientists looking to train AI models collaboratively while keeping their training data confidential. Their journeys with Arcium showcase how the system handles different confidential computing needs.

First, they each need to set up their secure computation environments, or MXEs. It is like renting a high-security building for your operations. Through Arcium's smart contracts on Solana, Joel specifies his security requirements for handling trade data, while Sid and Shlok configure their MXE for processing large AI datasets. These MXEs can operate independently and simultaneously, rather than forcing all computations through a single pipeline.

Next, they need workers to staff their secure facilities. This is where Clusters come in. A Cluster is a group of Arx nodes (individual computers) that will execute confidential computations. Joel creates a new Cluster optimised for processing trading data quickly, while Sid and Shlok choose an existing Cluster with powerful computational capabilities suited for AI workloads.

What's interesting is that Arx nodes must actively choose to join these Clusters. Each node independently evaluates the opportunity, considering factors like computational requirements and the reputation of other nodes involved. It's like skilled workers deciding whether to take a job based on the work environment and their potential colleagues.

Once their environments are ready, implementing confidentiality becomes remarkably simple. Joel marks his order-matching logic as confidential, while Sid and Shlok designate their model training functions for secure computation. It's just a single line of code placed before functions or data structures they want to keep private. Behind the scenes, Arcium’s compiler handles the complex work of converting these elements into secure MPC operations.

When users interact with Joel's exchange or when Sid and Shlok begin their model training, their confidential computation requests flow through several stages. First, they're verified by Arcium's smart contracts on Solana. Think of this as checking credentials at the security desk. The Cluster's Arx nodes then retrieve the encrypted inputs and begin their carefully choreographed MPC protocol, coordinated by arxOS.

Scaling Through Parallelisation

Arcium can run many computations simultaneously. For example, MXE-1 might run relatively simple computations at 100 per second while MXE-2 runs complex ones at 5 per second, and neither affects the other's throughput. Unlike Layer 1 blockchains, where high activity from one protocol (like an NFT mint) can cause network-wide congestion and make other protocols economically unviable due to gas spikes, Arcium's MXEs operate independently. So, while Joel's DEX is processing encrypted trade orders, Sid and Shlok's AI training can run independently on different Clusters. This true parallelisation extends beyond just running separate MXEs simultaneously.

Even within a complex computation, Arcium can run independent operations in parallel alongside those that must proceed in sequence. For instance, in Joel's exchange, while some nodes process new order encryptions, others could be handling token balance verifications. This hybrid approach enables the system to maintain both security and efficiency at scale.

The Blockchain Foundation

Arcium uses Solana as its coordination layer, much like a city's infrastructure supports its businesses. Solana handles essential functions like registering nodes, managing stake, scheduling computations, and processing payments. While Arcium itself remains stateless — focused purely on executing confidential computations without permanent state storage — it can seamlessly work with both on-chain and off-chain state.

This modular design achieves two key benefits. First, it allows Arcium to potentially connect with other blockchain networks while maintaining its security guarantees. Second, by managing state primarily through on-chain coordination, it gives nodes a single source of truth without requiring additional consensus mechanisms among themselves. The result is a highly scalable system where confidential computations can be processed efficiently, independent of the underlying state management.

This architecture represents a shift in how we think about confidential computing. Rather than trying to make single computers more secure through hardware solutions like TEEs, or accepting the massive performance penalties of FHE, Arcium creates a network where security emerges from the interaction of many participants.

What is different about Arcium?

When Sid and Shlok train their AI model on Arcium, their data remains encrypted while complex mathematical operations crunch through neural network calculations. Arcium makes this possible through several technical innovations.

The Right Tool for the Right Job

While recent advances in FHE have garnered attention, Arcium takes a more pragmatic approach by using Somewhat Homomorphic Encryption (SHE).

We only use additive homomorphisms and we don't directly use any homomorphic multiplications. What we do instead for multiplication is utilise correlated randomness.

An example of this is how different stocks might seem to move up and down randomly, but many stocks actually move together because they're affected by the same economic factors, like interest rates or oil prices.

So, while each individual event might look random on its own, there's an underlying connection that makes them move in similar ways. It's like invisible strings connecting things that otherwise appear to be completely independent and random.

It is comparable to building a railway system. FHE is like attempting to drive a train on a highway. If you build special adaptations, it’s possible but impractical, inefficient, and misaligned with the infrastructure's purpose. SHE, as implemented by Arcium, is like building specialised tracks for specific types of trains. By optimising for the most common journeys (addition operations) and creating efficient transfer stations for others (multiplication through correlated randomness), the system achieves better performance at a fraction of the cost.

This approach uses what cryptographers call "Beaver triples", precomputed values that make multiplication operations efficient. It's like having transfer stations strategically placed along the railway, where cargo can be quickly moved between trains without lengthy delays.

Correlated randomness here means two random values together with their product. It is generated by the nodes in a trustless pre-processing step.
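The addition and multiplication steps described above can be sketched in a few lines. This is a minimal illustration of additive secret sharing with a Beaver triple, not Arcium's implementation: the modulus `P`, the three-party setup, and every function name are assumptions made for the example, and `make_triple` uses a single trusted dealer as a stand-in for the trustless pre-processing the nodes would actually run.

```python
import secrets

P = 2**61 - 1  # prime modulus, chosen only for illustration


def share(value, n=3):
    """Split value into n random-looking shares that sum to value mod P."""
    parts = [secrets.randbelow(P) for _ in range(n - 1)]
    parts.append((value - sum(parts)) % P)
    return parts


def reconstruct(shares):
    """Combine all shares to recover the secret."""
    return sum(shares) % P


def add(xs, ys):
    """Addition is local: each party adds its own two shares."""
    return [(x + y) % P for x, y in zip(xs, ys)]


def make_triple(n=3):
    """Stand-in for preprocessing: shares of random a, b and c = a * b."""
    a, b = secrets.randbelow(P), secrets.randbelow(P)
    return share(a, n), share(b, n), share(a * b % P, n)


def multiply(xs, ys, triple):
    """Multiply two shared values using one precomputed triple."""
    a_sh, b_sh, c_sh = triple
    # The parties open d = x - a and e = y - b. Because a and b are
    # uniformly random, d and e reveal nothing about x and y.
    d = reconstruct([(x - a) % P for x, a in zip(xs, a_sh)])
    e = reconstruct([(y - b) % P for y, b in zip(ys, b_sh)])
    # x * y = c + d*b + e*a + d*e, computed share by share.
    zs = [(c + d * b + e * a) % P for a, b, c in zip(a_sh, b_sh, c_sh)]
    zs[0] = (zs[0] + d * e) % P  # the public term is added by one party only
    return zs


price, quantity = share(6), share(7)
total = reconstruct(multiply(price, quantity, make_triple()))
```

Each triple can be used for one multiplication only, which is why the expensive part of the work can be moved into a preprocessing step that runs before any real inputs arrive.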

The results speak for themselves. In many benchmarks [4], Arcium, even when running computations across multiple nodes, achieves speeds up to 10,000 times faster than FHE solutions. While FHE might seem like the more complete solution on paper, Arcium's practical approach proves that sometimes specialisation beats generalisation.

Breaking the Speed Barrier

Performance in MPC systems has traditionally been a significant bottleneck. These systems are often slow because they need to process everything in sequence and communicate extensively between parties. Arcium has developed several optimisations with the help of in-house developments and the acquisition of Inpher, a confidential computing company backed by JP Morgan. The acquisition allowed Arcium to gain access to technology that directly addresses these fundamental challenges.

They've optimised their cryptographic operations to make efficient use of existing hardware acceleration for Curve25519 computations. It's like having a specialised graphics card for gaming. But in this case, it's optimised for the specific mathematical operations that power their secure computations. Through clever software optimisations that minimise data movement and enable parallel processing, early testing suggests this could yield up to 10x performance improvements for cryptographic operations.

Integrating Inpher's technology, developed over nearly a decade and battle-tested in demanding enterprise environments, introduces crucial optimisations to address these challenges. At its technical core, this includes an enhanced compiler that removes unnecessary computations, faster ways to perform basic mathematical operations, and specialised hardware acceleration that speeds up complex calculations.

What makes this integration powerful is how the system handles different types of calculations. The architecture can dynamically switch between different processing modes — scalar, boolean and elliptic curve — depending on the specific needs of each computation. I think of it like having an electric screwdriver that changes heads based on the size of the screw.

This versatility enables practical machine-learning applications that were previously impractical in encrypted environments. For example, you need to preprocess data, evaluate model performance with various metrics, and understand how the model makes decisions. The system now handles all of these tasks—from basic statistical operations to advanced algorithms—all the while keeping the data encrypted throughout the process.

Critically, this performance improvement doesn't come at the cost of developer accessibility. By merging Inpher's Python framework directly into the Arcis compiler, developers can use familiar tools and workflows to build privacy-preserving AI applications. So, Sid and Shlok can write their AI models in Python using familiar libraries like PyTorch or TensorFlow, and Arcis seamlessly handles the integration with Solana and the encryption protocols.

The system intelligently manages the complexity of switching between encrypted and unencrypted operations, allowing developers to focus on solving problems rather than managing encryption protocols.

The Cost of Confidentiality

Confidential computing has traditionally come with a significant trade-off: security often means sacrificing speed and efficiency. Existing solutions—whether FHE, MPC, or TEE—impose substantial computational overhead, making large-scale adoption challenging.

Arcium aims to rewrite this equation by optimising secure computation at multiple levels. Its architecture, built on a parallelised MPC framework, reduces the inefficiencies typically associated with privacy-preserving computation. Unlike FHE, which suffers from extreme latency due to complex mathematical operations, Arcium’s system achieves near real-time execution, making confidential on-chain applications viable.

An early benchmark of Arcium’s Cerberus MPC protocol, compared to the most efficient open-source FHE libraries for Multi-Scalar Multiplication (MSM), tested two-party computations on machines with 8GB RAM (AMD EPYC-Milan). The results showed Cerberus was 10,000 to 30,000 times faster than FHE. Crucially, this measurement includes both the preprocessing and online phases. Since preprocessing—typically the most computationally expensive step—can be performed in advance, the actual computation runs at a fraction of this already accelerated runtime.

The implications extend beyond DeFi. AI models trained on encrypted data can now process large datasets without incurring prohibitive computational costs. Institutional investors can execute large trades without leaking market signals. Businesses can collaborate on shared datasets while preserving competitive secrecy.

By addressing both the computational and economic challenges of confidential computing, Arcium makes privacy-preserving computation not just theoretically possible, but practical at scale.

Navigating Obstacles in Confidential Compute

While Arcium advances confidential computing, key challenges remain, particularly in data handling, efficiency, and protocol flexibility.

The Input/Output Challenge

One of the biggest bottlenecks in Multi-Party Computation (MPC) is managing input and output (I/O) efficiently. As Yannik explains, "Limitations around I/O make it challenging to handle large-scale transactions." More nodes mean increased communication overhead, creating potential bottlenecks.

For example, hospitals collaborating on an AI model for disease detection must provide encrypted data without revealing patient information. The challenge is ensuring seamless data transmission along with encryption. Arcium’s MXE architecture is designed to optimise this process, but further improvements are needed to scale secure collaboration.

Building Oblivious Data Structures

Perhaps the most exciting development on Arcium's horizon is the implementation of oblivious data structures that allow data access without revealing which specific piece is being retrieved. Current systems require scanning all data, making lookups inefficient.
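To make the cost of scanning concrete, here is a minimal plaintext sketch of a linear-scan oblivious read. It is an illustration under assumptions, not Arcium's code: `oblivious_read` and the `touched` log are hypothetical names, and in a real MPC setting the selector bit would itself be secret-shared rather than computed in the clear.

```python
def oblivious_read(slots, secret_index):
    """Return slots[secret_index] while touching every slot in the same order.

    The returned access log is identical for every index, so an observer
    of memory accesses learns nothing about which slot was wanted.
    """
    touched = []
    result = 0
    for i, value in enumerate(slots):
        touched.append(i)
        selector = int(i == secret_index)  # would be a secret-shared bit in MPC
        result += selector * value         # arithmetic select, no branching on the secret
    return result, touched


order_book = [10, 20, 30, 40]
value, log = oblivious_read(order_book, 2)
```

Hiding the access pattern this way costs work proportional to the size of the whole dataset on every lookup, which is the inefficiency that oblivious data structures aim to remove.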

Arcium aims to enable constant-time reads and writes to encrypted data. Imagine a vast library where every book is locked in an opaque safe. Normally, finding a specific book would require unlocking and checking every safe one by one—an inefficient and time-consuming process. Arcium’s innovation is like equipping each book with a coded retrieval mechanism, allowing you to summon the right one instantly without revealing its location or contents.

This breakthrough also paves the way for sorted encrypted datasets and the construction of Oblivious RAM (ORAM) for confidential computing at scale. Just as a librarian can efficiently organise books without knowing their content, Arcium enables structured access to encrypted data, ensuring speed and privacy without compromise.

Once implemented, this will enable:

Constant-time reads and writes to encrypted data: Critical for applications like Joel’s DEX, where encrypted order book access must be fast.

Efficient sorting of private information: Essential for AI training, enabling rapid dataset traversal.

Encrypted hash maps and trees: Enhancing performance in large-scale confidential computations.

For DeFi and AI applications, these improvements will unlock faster execution, enabling privacy-preserving transactions and AI model training without compromising efficiency.

Multi-Protocol Support

Arcium currently uses the BDOZ protocol, a system that ensures data privacy even if only one participant remains honest. The protocol works in two key ways:

First, it splits data into encrypted shares among participants, with security checks (MACs) preventing tampering. No single party can see the complete data, but together they can perform computations on it securely.

Second, BDOZ divides work into two key stages: a preprocessing phase and an online computation phase. During preprocessing, the nodes generate "randomised raw materials" like multiplication triples that will be needed later for secure computations, but are independent of the actual input data. For example, if nodes need to multiply encrypted values during the computation, they prepare special random values ahead of time that make those multiplications much faster when the real data arrives.

When the actual private input data comes in during the online phase, the nodes can leverage these preprocessed materials to perform calculations efficiently. It is akin to a restaurant kitchen preparing ingredients and sauces before service starts. When orders come in, dishes can be assembled quickly thanks to the prep work done earlier. The preprocessed values act as cryptographic building blocks that speed up operations on the encrypted data without revealing anything about the inputs themselves.
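The tamper checks mentioned above can be illustrated with a simple linear MAC of the kind BDOZ-style protocols use between pairs of parties. This is a hedged sketch rather than Arcium's implementation: the modulus `P` and the names `keygen`, `mac`, and `verify` are assumptions, and a real deployment would run such checks on every share exchanged during the online phase.

```python
import secrets

P = 2**61 - 1  # prime modulus, chosen only for illustration


def keygen():
    """The verifier's secret key: a nonzero multiplier alpha and an offset beta."""
    return secrets.randbelow(P - 1) + 1, secrets.randbelow(P)


def mac(key, share):
    """Tag for a share: alpha * share + beta (mod P)."""
    alpha, beta = key
    return (alpha * share + beta) % P


def verify(key, share, tag):
    """The verifier recomputes the tag from the share it was sent."""
    return mac(key, share) == tag


key = keygen()             # held by the verifying node
share = 123                # held by the sending node
tag = mac(key, share)      # handed to the sender during preprocessing

honest = verify(key, share, tag)        # share sent unchanged
tampered = verify(key, share ^ 1, tag)  # a single flipped bit
```

Without knowing `alpha` and `beta`, a sender who changes its share can only guess a matching tag, which succeeds with probability about 1 in `P`. Because each check ties a specific sender to a specific verifier, a failed check also indicates who supplied the bad value.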

Real-World Possibilities

The potential of Arcium's technology becomes clear when we examine how it could transform different industries. While the underlying technical challenges are complex, the real-world applications are surprisingly tangible.

Revolutionising AI Development

Sid and Shlok’s AI experimentation highlights how privacy-preserving AI could transform healthcare. Hospitals hold vast patient data that could improve disease diagnosis, but strict regulations make collaborative research difficult.

A real-world example comes from the UK’s NHS: the Royal Free London NHS trust partnered with Google DeepMind in 2015 to develop a tool for detecting kidney injury. In 2017, the UK's data protection regulator ruled that the arrangement broke the law by sharing 1.6 million patient records without proper consent. This underscores the challenge: AI models need both privacy and explainability, so that doctors and regulators can understand why a model makes specific predictions.

Need for Transparency

The risks of opaque AI decision-making are evident in crypto. Andy Ayrey created Truth Terminal, an autonomous AI Twitter account. Marc Andreessen sent Truth Terminal $50,000 in Bitcoin after seeing its response about what it would do with $5 million. Several tokens were also sent to Truth Terminal's blockchain addresses. The AI latched onto $GOAT, and we still don't fully understand why. Even more remarkably, it managed to convince humans to invest in what was essentially a random token, driving its market cap to an astonishing $1 billion.

The fact that we don’t fully understand why the AI fixated on $GOAT underscores the dangers of unexplained AI-driven decisions. If AI can manipulate financial markets unpredictably, its role in healthcare, finance, and governance needs strict oversight and verifiable explanations.

The Role of XorSHAP

Arcium’s XorSHAP technology addresses this by making decision tree models both private and interpretable. Traditional deep learning models act as "black boxes," whereas XorSHAP allows privacy-preserving feature attribution, showing which factors influenced a diagnosis without revealing patient data.

For example, a hospital AI system might evaluate age, blood pressure, test results, and family history. XorSHAP can reveal that blood test abnormalities contributed 60%, family history 25%, and age 15% to a diagnosis, without exposing raw patient data. This ensures regulatory compliance while making AI decisions transparent and actionable.
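The kind of attribution described above is based on Shapley values, which can be computed exactly for a tiny model. The sketch below runs in plaintext on a made-up three-feature decision tree. It is not XorSHAP, which performs this kind of computation on private data; the tree, the feature names, and the risk scores are all hypothetical.

```python
from fractions import Fraction
from itertools import permutations


def risk_tree(x):
    """Hypothetical decision tree returning a risk score."""
    if x["blood_test_abnormal"]:
        return 80 if x["family_history"] else 60
    return 30 if x["age_over_60"] else 10


def shapley(model, instance, baseline):
    """Exact Shapley values: average each feature's marginal effect
    over every order in which features can be switched on."""
    features = list(instance)
    orders = list(permutations(features))
    totals = {f: Fraction(0) for f in features}
    for order in orders:
        current = dict(baseline)
        previous = model(current)
        for f in order:
            current[f] = instance[f]
            score = model(current)
            totals[f] += score - previous
            previous = score
    return {f: total / len(orders) for f, total in totals.items()}


patient = {"blood_test_abnormal": True, "family_history": True, "age_over_60": True}
reference = {"blood_test_abnormal": False, "family_history": False, "age_over_60": False}

phi = shapley(risk_tree, patient, reference)
percent = {f: float(100 * v / sum(phi.values())) for f, v in phi.items()}
```

The attributions always sum to the difference between the patient's score and the reference score, which is what lets them be reported as percentage contributions. Enumerating every ordering only works for a handful of features; practical tree explainers rely on faster tree-specific algorithms.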

XorSHAP is not a silver bullet by any means. Its effectiveness depends on decision tree-based models, which are well-suited for structured, rule-based decisions but may not capture complex patterns as effectively as deep learning methods. While decision trees offer explainability, they trade off in predictive power compared to more sophisticated AI architectures.

Practical AI Explainability

Arcium’s system can explain multiple AI predictions simultaneously, allowing hospitals to gain real-time insights while preserving confidentiality. AI models trained on combined but encrypted datasets can identify rare diseases without exposing individual patient records.

Each hospital retains full control of its data while contributing to a shared, more robust model. Even model weights remain encrypted during updates. This approach enables privacy-compliant AI innovation, particularly in regulated industries like healthcare.

Leveraging data from the Stanford AI Index, which highlights increasing regulatory pressure on AI systems, Arcium ensures both transparency and compliance with evolving standards. By combining privacy-preserving computation with model explainability, it becomes a key enabler of secure and trustworthy AI innovation.

Unsurprisingly, no single method solves all AI challenges—XorSHAP provides a strong foundation for decision tree models, but broader AI explainability in confidential computing will require continued innovation.

Each hospital retains full control over its patient data, yet the AI model benefits from collective knowledge across institutions. Training occurs on encrypted data, with even the model weights remaining private. This approach makes it possible to analyse rare medical conditions, where data is scarce in individual hospitals but becomes statistically significant when aggregated securely.

AI has dominated the tech landscape in recent years, shaping industries and sparking debates—including Ben Affleck’s take on the subject.

Transforming DeFi

Joel's DEX illustrates how Arcium could fundamentally change how we think about market transparency. Currently, DeFi faces a paradox—while blockchain ensures transaction transparency, this very transparency enables harmful practices like front-running and sandwich attacks.

One prominent example of this is MEV (Miner Extractable Value), where validators or miners take advantage of transaction order flow to maximise their profits. Recently, Jito validator tips even eclipsed Solana's transaction fees, highlighting just how much profit there is to be made from MEV. The rise of MEV has introduced significant inefficiencies and risks to blockchain ecosystems, often to the detriment of regular users. As activity on Solana has surged, so has MEV. Currently, Jito validators have an annualised run rate of $146 million. This can be seen as a proxy for MEV on Solana.
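A small worked example shows why visible order flow is worth extracting. The sketch assumes a fee-free constant-product pool (x * y = k), which is a simplification chosen for the arithmetic and not a description of Joel's order-matching design; the pool sizes, trade sizes, and function names are likewise made up.

```python
def swap(pool_in, pool_out, amount_in):
    """Fee-free constant-product swap.

    Returns (amount_out, new_pool_in, new_pool_out).
    """
    k = pool_in * pool_out
    new_in = pool_in + amount_in
    new_out = k / new_in
    return pool_out - new_out, new_in, new_out


def victim_alone(x, y, victim_in):
    """What the trader receives when nobody sees the order in advance."""
    received, _, _ = swap(x, y, victim_in)
    return received


def sandwich(x, y, victim_in, attacker_in):
    """Attacker buys before the victim's visible order and sells after it."""
    attacker_y, x, y = swap(x, y, attacker_in)   # 1. front-run: buy Y
    victim_y, x, y = swap(x, y, victim_in)       # 2. victim trades at a worse price
    attacker_x, y, x = swap(y, x, attacker_y)    # 3. back-run: sell Y for X
    return victim_y, attacker_x - attacker_in


clean = victim_alone(1000.0, 1000.0, 100.0)
sandwiched, attacker_profit = sandwich(1000.0, 1000.0, 100.0, 50.0)
```

With these numbers the trader receives roughly 90.9 units when trading alone and roughly 82.8 when sandwiched, while the attacker ends up with about 9.7 more units than it started with. The attack depends entirely on seeing the pending order before it executes.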

Arcium eliminates this issue by encrypting order flow. Traders submit orders privately, with details revealed only at settlement. This prevents MEV, ensuring fair execution without leaking market signals. If such technology removes MEV opportunities, protocols like Jito may need to pivot or find alternative revenue models.

The impact extends beyond trading. Flash loans, cross-chain swaps, and institutional portfolio rebalancing could occur confidentially, shielding strategies from market manipulation. Arcium can enable secure, private execution at scale, levelling the playing field for all participants.

Beyond Blockchains

Arcium’s approach can enable privacy-preserving data collaboration across industries. Banks could detect fraud in real-time without exposing customer data, while manufacturers could coordinate supply chains without revealing proprietary details. A carmaker, for instance, could manage deliveries across multiple suppliers while keeping operations confidential.

Its design stands out for its practicality—requiring just one honest participant to ensure privacy and using parallel computation to make secure processing more efficient. For decision tree models, XorSHAP offers insight into feature importance, though broader AI explainability remains an ongoing challenge.

From Veritasveil’s nobles to modern dark pool users, organisations have long sought ways to collaborate without compromising sensitive information. As privacy regulations tighten and AI becomes more embedded in critical systems, solutions like Arcium present a possible path forward. But a few key questions remain: How well will it handle real-world workloads? Will developers adopt it despite its learning curve? Can it balance security, performance, and usability in practice?

While Arcium offers a promising approach, it is not yet live. Its effectiveness will ultimately depend on adoption and real-world performance. Whether it turns confidential computation into a practical reality remains to be seen.

Enjoying the new home office,

Saurabh Deshpande

Disclaimer: DCo members may have positions in assets mentioned in the article. No part of the article is financial or legal advice.

Multi-Party Computation (MPC) can achieve similar outcomes today using oblivious data structures and Oblivious RAM (ORAM). These allow computations on encrypted data without revealing which parts of the data are being accessed or modified, preventing side-channel leaks. While not a complete substitute for obfuscation, they offer practical confidentiality in shared computing environments, making it possible to perform operations without exposing the underlying logic or memory access patterns.

When you have the means to add and multiply, you can easily subtract and divide using negative numbers and reciprocals, respectively.

Throughout this article, we use “private computing” and “confidential computing” interchangeably.

The comparison uses tFHE-rs 0.7.3 and Arcium’s closed source Cerberus for MSM. Time for Cerberus includes a preprocessing and online phase for two parties. Machines: 8GB of RAM, AMD EPYC-Milan.

If you liked reading this, check these out next:

- Ep 30 - The Encrypted Supercomputer with Yannik from Arcium

- Ep 20 - On FHE, Zama, and New Internet Standard